The Future of Media: Fewer, Bigger Hits, An Even Longer Tail, No Middle and Lower Returns

Two frameworks show where we’re headed

[Note that this essay was originally published on Medium]

“It may be highly unpredictable where a traveler will be one hour after the start of her journey, yet predictable that after five hours she will be at her destination. The very long-term future…may be relatively easy to predict.” Nick Bostrom

I was recently asked to speak on a panel called “The Future of Media.” The title was a little tongue-in-cheek; we didn’t really expect to do any justice to the future of media in 45 minutes. But it seemed like a good reason to organize some long-gestating thoughts on, well, the future of media or, more precisely, the business of producing and distributing media.

The rolling disruption of media over the last two decades, of newspapers, music, magazines, radio, home video, TV and theatrical — roughly in that order — is old news. It happened because digitization lowered barriers to entry, new entrants flooded in, consumer choice exploded and attention fragmented. Seems pretty straightforward. But there are still important questions, with tens of billions of dollars in revenue and hundreds of billions of market cap hanging in the balance. Some media have proved more resilient than others, like national TV (about $100 billion in advertising and affiliate fees in the U.S. last year and still growing) and theatrical films (>$10 billion in domestic box office, which has held about steady for a decade, and billions more in downstream licensing revenue). Why is that? Do they have inherent structural advantages? Did they learn enough from watching the disruption of other media, like newspapers, magazines and music, to head off the worst effects? Local media has not been resilient, but it has been surprisingly persistent. Terrestrial radio generated over $10 billion in ad revenues in the U.S. last year and even the beleaguered newspaper business generated about $20 billion in total revenue domestically. Is local media stabilizing or will declines accelerate from here? More broadly, will media continue to fragment into the tail, as mass culture gives way to a near-infinite number of individual tastes and voices? Do hits matter anymore? How will a generation weaned on Fortnite and TikTok consume media in a decade or two?

No one has a precise answer to any of these questions. But frameworks can help. A good framework provides a language to describe phenomena; the perspective to weave together seemingly disparate observations and recognize patterns over time and across domains; the insight to focus on the most important factors; and, maybe, the tools to map the probability distribution of possible outcomes. To try to add perspective to what’s happened in media, and insight into what will likely happen next, I will lean on two frameworks: Clay Christensen’s theory of disruptive innovation and Chris Anderson’s theory of the long tail.

If you want the tl;dr version, here it is: The future state of media seems clear, even if the timing does not. On the supply side, every form of media is being disrupted in a similar way. There are structural reasons why some media have been more resilient than others, but these will only reduce the speed and scope of disruption; they won’t prevent it. On the demand side, the customer definition of “quality” is shifting, causing consumers to adopt a barbell approach, gravitating to the biggest hits, on one end, and to the tail of authentic, relevant, viral, personal content, on the other. Taken together, these supply-side and demand-side dynamics will continue to reduce the aggregate profit pools of producing and distributing media and shift value instead to consumers, talent, rights owners and aggregators. The “future of media” is a thinner, taller head of fewer, bigger hits, no middle, a much longer tail and, for producers and distributors of content, lower profits.

Media is a Poster Child for Disruption

Anyone reading this is probably familiar with at least a sketch of Christensen’s model of disruptive innovation: a new entrant enters a market with a lower-priced, lower-performance product that is “good enough” for low-end customers; the incumbents ignore, dismiss or wish away the threat, ceding the low end of the market; and the new entrant gains a foothold and systematically improves performance, moves upmarket and picks off more demanding tiers of customers, until the incumbent is toast. It is primarily a model of supply side dynamics, namely the competitive response when a new entrant, usually armed with new technology and a new business model, enters an established market. To apply the model to media it will be helpful to dig in a little deeper.

Two Types of Disruption

Perhaps less well understood is that there are two types of disruption, which Christensen first laid out in his classic The Innovator’s Solution: low-end disruption and new-market disruption. Low-end disruption is characterized by a new entrant attracting existing low-end customers with a new product or service at a lower price and lower performance along the traditional bases of competition. New-market disruptions occur when new entrants attract new consumers and, perhaps more important, provide a new combination of performance features that are initially less valuable to traditional, high-end customers. As these new performance features improve, if they prove valuable to traditional customers, they change the bases of competition or, stated another way, they change the customer definition of quality. This idea is shown in Figure 1. Each plane represents a different “value network” (the traditional value network and the new one), each defined by how customers define quality. The new entrant systematically moves upmarket in the traditional value network and, if its new features are sufficiently valuable, it will pull traditional customers into the new value network. The incumbents are generally hard pressed to match this combination of new performance features because they are structurally unable to do so or, by the time they get around to trying, it’s too late.

It is hard to think of many examples of disruption that aren’t a combination of both types. When Airbnb emerged it was both low-end (less expensive than hotels, with much lower performance along traditional bases of competition, like trust and convenience) and new-market (it attracted people who couldn’t afford to stay in hotels and offered a whole range of new features, like the ability to see much more detailed information on the space, more room for the money, more privacy, the ability to stay in non-touristy neighborhoods and new amenities, like a kitchen or bunk beds). If they wanted to, incumbent hotel chains could theoretically compete with Airbnb on price, but they have no earthly way to move their hotels into quaint, authentic neighborhoods or jam full-service kitchens into their rooms.

Figure 1. Low End and New Market Disruption

Source: Adapted from The Innovator’s Solution.

Folding in the Language of Jobs Theory

In Solution, Christensen also introduced what he called “jobs-to-be-done” theory, or just jobs theory (an idea he adapted from elsewhere), which adds some useful language to the disruption framework. (Ultimately, he dedicated an entire book to the topic, Competing Against Luck.) The idea is that people don’t buy products because they happen to fall into some traditional category of customer segmentation, like age, gender, or whatever, but rather people “hire” successful products to accomplish a “job” that they are seeking to do in a certain circumstance. They “fire” existing solutions when a sufficiently better one comes along. Most products do a variety of jobs, which have varying importance to different customers. For instance, a car might fulfill the job of transporting you quickly, it might be spacious enough to carry a large family, it might pull cargo, it might be a mobile workplace, it might provide safety or comfort and it might send a message about your social status or self-image. Each of these jobs is a different basis of competition. The most successful companies have an intimate understanding of the jobs they are being hired to do and tailor the entire customer experience around those jobs. Often customers have jobs that no existing product can suitably do; these unmet jobs are potentially big opportunities.

What Determines the Speed and Degree of Disruption?

The disruptive process has played out umpteen times, such as the way mini-mills disrupted integrated steel mills (Christensen’s canonical example in The Innovator’s Dilemma); digital photography disrupted film photography; angioplasty disrupted cardiac bypass surgery; PCs disrupted mainframes; mobile disrupted PCs; low-cost airlines disrupted traditional air travel; Uber and Lyft disrupted the limousine business, and so on. One topic that Christensen never discussed much was the factors that determine the speed and degree of disruption. It took decades for mini-mills to disrupt integrated steel mills; Waze and Google Maps disrupted standalone GPS navigation devices like Garmin and TomTom seemingly overnight. Some disrupted industries essentially go away (film photography), while others linger indefinitely (like much of “old” media). So, what determines the difference?

From observation, there are three things that determine the speed and degree of disruption: the barriers for new entrants to move upmarket and satisfy traditional customers’ most demanding jobs; the relative importance to customers of the new jobs new entrants do (and, by extension, the degree to which these new jobs change the definition of quality); and customer switching costs.

The barriers for the insurgent to move upmarket. Most disruptive innovations are facilitated by a disruptive technology that commoditizes one or more jobs the incumbent does. The relative importance of the jobs that are commoditized — and the jobs that are not — will determine the pace and degree of disruption. Consider online education. Ubiquitous high-bandwidth Internet access has commoditized one of the most important jobs of higher education: imparting knowledge. However, higher education also does other jobs, such as providing young adults a structured transition from dependence to independence (or maybe back to dependence when they move back into the basement), enabling students to form new and lasting relationships, providing an alumni network and signaling to prospective employers. None of those have been commoditized by Coursera or Udacity yet and, so far at least, they remain important jobs. That may be why higher education has not yet been disrupted to the degree many expected.

The relative importance of the new jobs the upstart does. The prior point pertained to what degree the insurgent commoditizes the most demanding jobs the incumbent already does. This point pertains to the perceived value of the insurgent’s new jobs. As mentioned above, most disruptive entrants also do jobs that the incumbents don’t do. If those are sufficiently important to customers, they change the customer definition of quality; they change what people consider when “hiring” and “firing” products. Take GPS again. Driving navigation apps, like Waze or Google Maps, are somewhat less reliable than standalone GPS devices because they can lose their signal. But because they crowdsource traffic information from the network, when they emerged they also offered to do a new job that GPS devices didn’t do: “enable me to circumvent traffic.” That job was so important that it changed the basis of competition almost overnight.

Consumer switching costs. Switching costs determine how easy it is for customers to “fire” the existing solution. The easier it is, the faster the disruption. Switching costs could be sunk investment in equipment or knowledge; or transactional costs to move from the incumbent’s to the insurgent’s solution, otherwise known as customer inertia. Keep in mind that switching costs are relative. Customers are always implicitly weighing the cost-benefit tradeoff of incurring a switching cost and both the cost and benefit may change as circumstances change.

Lessons from Disruption Theory for Media

With that background out of the way, let’s apply this to the media business. One helpful adjustment is to segment customers not into groups of discrete humans, but by the jobs they are trying to do in a given circumstance. One subscriber of the local newspaper, for instance, might sometimes pick up the paper to see the syndicated national news, sometimes the local sports, sometimes the classifieds, sometimes the movie listings (in 1985, that is). So, the relevant customer segmentation might be “syndicated national news reader,” “crossword enthusiast,” “local sports reader,” or “classifieds reader,” with some people falling into multiple categories. Another related adjustment is to recognize that since many media companies have traditionally sought to do many different jobs for consumers, many faced numerous disruptive entrants, all attacking different jobs. Sticking with local newspapers, they’ve faced off against Craigslist, eBay and CareerBuilder all targeting different facets of classifieds users; Facebook, Patch and Nextdoor targeting hyperlocal news readers; Facebook, Google and Yahoo targeting national syndicated news readers; The Athletic targeting local sports fans, etc.

Lesson 1: A Similar Pattern of Disruption Across Media

The simplest, and probably most self-evident, lesson of trying to apply this model to media is that it works. Sure, the details and timing differ. Music was disrupted because the labels were overwhelmed by piracy (due to the advent of Napster and BitTorrent) and struck a lesser-of-two-evils deal to unbundle albums on iTunes. Newspapers, probably more so than any other media, faced (and face) a multi-front war. TV networks sowed the seeds of their own disruption by glibly viewing streaming video providers (like Netflix) as another monetization window and licensing their best content to the disrupters. And some of the current “insurgents” in TV happen to be some of the largest, best capitalized companies in the world, which don’t actually need to make a profit in the TV business (an idea Christensen didn’t contemplate: competitors who don’t need to make money!). Nevertheless, newspapers, magazines, music, local TV, radio, home video, national TV and theatrical were (or are being) disrupted in essentially the same way. In all cases, a new entrant emerged, armed with a new business model, and offered a product that was generally cheaper, provided lower performance than the incumbents along the traditional bases of competition, and introduced new features — and the incumbents were unable to fend off the attack.

Figure 2, for instance, shows how the model applies to TV, something near and dear to my heart. I worked in a few roles at Time Warner from 2007 to 2018, including heading investor relations and running strategy at Turner, so I watched all this play out like a very slow-motion car crash. As shown, Netflix, as a proxy for streaming video, started out with a relatively limited slate of low-production value content (or at least older content), but rapidly improved the breadth and production value of its programming to first satisfy the requirements of kids and viewers of syndicated shows (re-runs), then moved upmarket to viewers of unscripted shows (reality TV) and finally to viewers of high-quality scripted originals. YouTube, as a proxy for user-generated content (UGC) and lower-cost professionally produced programming, followed a similar path, first competing vigorously for kids’ attention and for viewers of some types of unscripted content, but it has not yet broached the scripted market. The traditional broadcast and cable networks, in the diagram represented by ABC and TNT, improved performance along the traditional basis of competition — production quality — by investing more in original productions. This only overserved consumers with far more original content than they could ever watch (some 500 scripted series annually across TV, at last count) and failed to stave off the attack from below.

Figure 2. TV Fits the Disruption Model

Source: Author analysis.

Lesson 2. The Most Insulated Media Have Been Protected by the High Cost of High “Quality”

Even though the pattern of disruption has been very similar, some media have proved much more resilient than others. Why is that? As mentioned above, one of the determinants of the speed and degree of disruption is the barriers for new entrants to move upmarket, which is a function of the degree to which new technologies commoditize the most demanding jobs that incumbents do.

The most demanding jobs that media companies do are produce and market high-production value content, and those jobs have not been commoditized.

The primary technological innovations that enabled disruption in media were the combination of digitization, ubiquitous accessibility through the Internet, improved compression, cheaper storage and higher-throughput broadband infrastructure (all but the last of which were propelled by Moore’s Law). This suite of technologies reduced the marginal cost of distribution of media to essentially zero and simultaneously eroded some of the historical physical and regulatory barriers to entry in many media. (For instance, local TV and radio stations were historically protected by limited spectrum availability in any given market and the capital investment in broadcasting facilities; local newspapers were similarly insulated by the high capital requirements for printing plants and physical newspaper distribution. The ubiquity of Internet access and the fact that Internet publishers don’t pay for the underlying distribution infrastructure eliminated these entry barriers.)

By contrast, the cost to produce (and market) high-production value content went up. As venture capitalist Bill Gurley used to say, “backhoes don’t obey Moore’s Law.” Neither do lunches at The Ivy or Super Bowl ads. So, while some costs of content production fell — facilitated by cheaper production equipment and more powerful and cost-efficient post-production tools and content management systems — the largest costs, namely talent, rights and marketing, did not. Instead, they have risen as the competition for content and consumer attention has increased. So, while the cost to distribute content has plummeted, the cost to produce high-production value content has risen.

And it’s not just that producing high-production value content is expensive, it’s also just hard to do well. It is supported by decades of institutional experience; a tightly connected ecosystem of talent (actors, directors, writers), agents, managers, external and in-house producers, and studio/label/editorial executives; and a long-held “way of doing things.” Money is still the main currency, but status and relationships follow closely behind. All of which is to say that it is not easily commoditized.

It is telling, for instance, that music retailing — which was entirely a distribution business — was upended early and entirely, but the music labels, which make large marketing investments, have deeply entrenched relationships and manage the very complicated flow of rights contracts and payments, among other things, have only consolidated their power. (Of course, the labels’ economics were squeezed as the distribution model changed and revenue fell industry-wide, but they’re alive and well and reaping the benefits of the industry’s return to growth; Tower Records is not.)

This explains why relatively high-production value, high content-cost verticals like theatrical films, national broadcast and cable networks and national newspapers have been better insulated and taken longer to disrupt than local media businesses with lower production values and lower content and marketing spend.

So, the good news for some of the most resilient media is that they have proved resilient for a reason and their competitive moats will continue to erode only slowly. The bad news is that they will erode. As new entrants gain scale and resources, they are increasingly able to compete for talent, rights and consumer attention, which ultimately pressures returns and steadily chips away at the incumbents’ market share. This idea is hardly a newsflash for anyone watching the TV and film sector with even half an eye; last year, reportedly Netflix spent $15 billion on content, Amazon spent more than $5 billion and Apple announced intentions to spend $6 billion (albeit over an indeterminate time frame). But looking at this rapid increase in spending through the lens of disruption theory illustrates that it’s not just the barbarians at the gates; it’s evidence of the later stages of the disruption process that has played out everywhere else across media, the effort to satisfy the most demanding customers. The relative resilience of some media is a matter of degree, not kind.

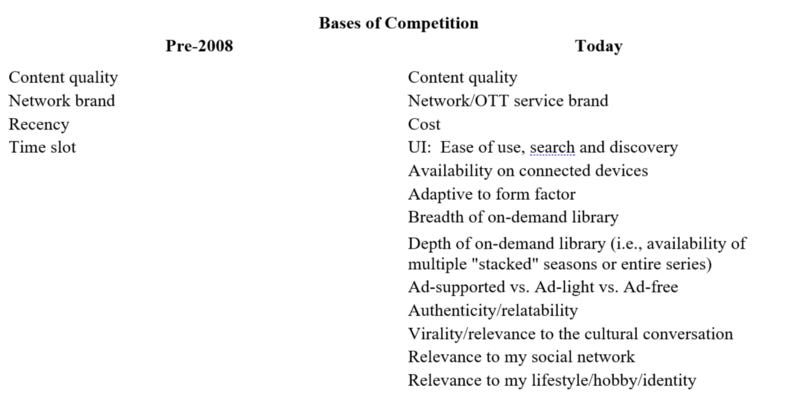

Figure 3. The Bases of Competition Have Changed Radically for TV

Source: Author analysis.

Lesson 3. The Consumer Definition of Quality is Changing

The other bad news, even for the most resilient incumbents, is that the customer definition of quality is changing. Traditional media executives don’t need to be convinced that they’re being disrupted. They live it every day. It is harder for many of them to grasp that their definition of quality is increasingly at odds with consumers’ evolving definition. But whether they appreciate it or not, the most important job they do is not as important as it used to be.

Again, TV is a good example. Prior to the advent of Netflix, the experience of watching TV was uniform along most dimensions. You subscribed to a pretty rigid bundle of networks, watched when shows were aired, accessed them through a similarly-crappy interactive program guide, suffered through roughly the same number of ads per hour, etc. So, the primary basis of competition in choosing a TV show was the consumer perception of “production value,” which really means how well the programming did the job a consumer “hired” it to do (make me laugh, feel suspense, feel superior to someone, be relevant around the water cooler, etc.). After the advent of over-the-top video, specifically Netflix and YouTube, consumer choice exploded along multiple dimensions, as shown in Figure 3. Now the question is not only the production value of the content, but also: Is the UI intuitive and easy to navigate? Are there ads? How many episodes or seasons can I access? Is it on my Roku? Is the content authentic or viral or relevant to my sub-community?

New bases of competition will likely continue to emerge, continually changing the definition of quality. For a generation weaned on Fortnite, interactivity, quality of gameplay and the ability to watch/play with your friends are becoming increasingly important. (The popularity of the Google Chrome extension Netflix Party is an early signal of this.) Should VR/MR/AR ever take off, “immersiveness” could become yet another dimension.

The implications of the changing bases of competition are twofold:

It erodes the relative importance of the prior bases of competition and may therefore further erode traditional entry barriers. Let’s stick with TV. As mentioned, it is expensive and difficult to make high production value content. Many of these new dimensions of quality, however, have much lower barriers to entry. To the extent that consumers care less about content quality as it was once defined — big, name-brand talent, expensive rights and high production values — then incumbents’ competitive positions will be further eroded.

The incumbents are generally unable to keep up. As mentioned above, the incumbents often don’t have the will, flexibility or skillsets necessary to match the insurgents’ suite of new features (or jobs). Their interlocking activity systems, organizational structures, cultures and incentives are oriented to their current priorities, making it very hard, if not almost impossible, to adjust. Staying with the TV theme: Netflix, at its heart, is a data and product company. It has thousands of engineers, probably one out of every four employees, and runs thousands of A/B tests per year in an effort to continually fine-tune the user experience. As has been well documented elsewhere, it is very hard for traditional TV companies, many of which are historically wholesalers, to compete in a direct-to-consumer world.

Lesson 4. Switching Costs Matter, But Only So Much

The last key determinant of the pace and degree of disruption is the degree of consumer switching costs or inertia. For some media, these tend to be very low. It’s easy to switch to a new radio station, podcast or playlist. Sometimes switching costs constitute sunk investments in hardware and software — like for gaming consoles and games. Transactional costs associated with subscription relationships can also create very high consumer inertia. As of 2015, 2 million people still paid for AOL dial-up. And 90 million+ households still pay for a “cable” subscription. Nevertheless, as mentioned above, customers are always implicitly weighing the costs and benefits of incurring switching costs and this calculus changes as circumstances change. Switching costs tied to sunk investments in equipment, for instance, are influenced by the length of equipment upgrade cycles; when the new generation of game consoles comes out, you eventually need to upgrade anyway. Similarly, the switching costs to cancel cable or satellite service are often high — you either need to call for a truck roll or drop off the set-tops yourself — but these also go to zero every time someone moves homes. And while many people might consider it too big a pain to cancel cable service under normal macroeconomic circumstances, if we enter a prolonged recession more consumers are likely to choose to incur that cost.

Next, I’ll layer on another framework, the theory of the long tail.

Another Angle: The Long Tail

In 2004, Chris Anderson wrote an article for Wired called The Long Tail, followed by a similarly-titled book a couple of years later (always a book!). Whereas Christensen’s disruption theory is primarily a model of supply side dynamics, the long tail is primarily a model of demand side dynamics — namely how consumers behave when confronted with vastly more choice. Disruption theory is very good theory; it has stood the test of time and, while not perfect, has strong descriptive and predictive power. The long tail is not good theory. It is a good idea that was not explored rigorously at the time and it hasn’t held up as well. However, it captured some important truths about the economics of information goods and it provides another useful angle for figuring out what’s happening in media today.

The Long Tail in a Nutshell

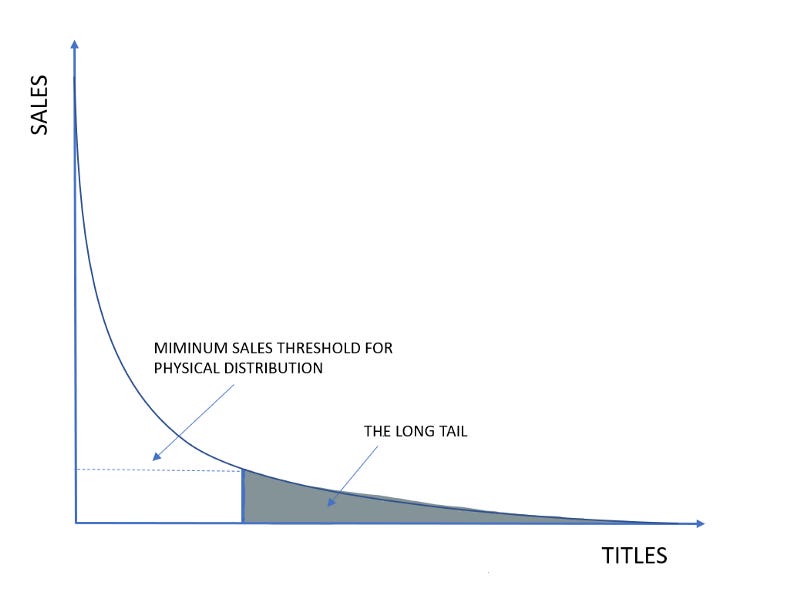

The main idea is that historically the distribution of information goods, i.e., media, was constrained by shelf space. There’s only so much space in a Barnes & Noble (or a Walmart or Target) and, as a result, a CD or book had to generate some minimum threshold of sales, say, annually, to justify the opportunity cost of taking up space. (The supply of local radio and TV stations and cable networks is also constrained by usable spectrum in the air or in a coaxial cable.) On the Internet, however, shelf space is effectively infinite and, therefore, the amount of content available is also effectively infinite. Anderson’s “long tail” referred to the hundreds of thousands of books, movies or songs that fell below the necessary threshold for physical distribution that could now be profitably distributed online (Figure 4).

Figure 4. The Long Tail

Source: Adapted from The Long Tail.

Anderson drew two primary conclusions from this setup: 1) the future of media is “selling more of less,” meaning that the total area under the long tail was likely to be sizable — representing anywhere from 20%-60% of total sales volume for books, songs and movies; and 2) the biggest hits, or blockbusters, are not a product of consumer preference, but a byproduct of artificial scarcity of shelf space or, as he put it, “[f]or too long we’ve been suffering the tyranny of lowest-common-denominator fare, subjected to brain-dead summer blockbusters and manufactured pop….Many of our assumptions about popular taste are actually artifacts of poor supply-and-demand matching — a market response to inefficient distribution.” The implication of the latter was that blockbusters would fade in importance.

Lessons from the Long Tail

It’s not really fair to criticize a 16-year-old article. Not much in tech-world probably holds up well over that time frame. But it’s instructive to look at what the theory got right and what it missed.

Lesson 1. What it Got Right: The Tail is Real

The tail is not ’80s Ska bands or French-language documentaries, as Anderson thought. The tail is UGC, including content produced by individual (semiprofessional) creators. Forget about the 300,000 or 400,000 songs on Rhapsody that didn’t sell enough to warrant physical distribution; SoundCloud has over 200 million songs. YouTube has about 30 million channels, uploading a reported 500 hours of video per minute — or the equivalent of an entire year’s worth of original scripted TV programming every 15 minutes. That’s a tail. As Anderson intuited — to use the language of jobs theory — content in the tail is able to do all kinds of jobs that content in the head cannot: it can feel authentic in a way that mass-market content cannot; it can be different and unique and therefore feel more personal; it can be relevant to one’s social network; and it can super-serve one’s niche interests.
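The “every 15 minutes” comparison can be sanity-checked with simple arithmetic. The sketch below uses only two figures quoted in this essay (500 upload hours per minute, and the roughly 500 scripted series a year mentioned earlier); the function names are hypothetical, and the essay does not state the average running time of a scripted series, so the result is an implied threshold, not a sourced number.

```python
# Figures quoted in the essay; everything else here is illustrative.
UPLOAD_HOURS_PER_MINUTE = 500    # reported YouTube upload rate
SCRIPTED_SERIES_PER_YEAR = 500   # "some 500 scripted series annually"
WINDOW_MINUTES = 15


def hours_uploaded(minutes, rate=UPLOAD_HOURS_PER_MINUTE):
    """Hours of video uploaded in a window of `minutes` at the quoted rate."""
    return minutes * rate


def breakeven_hours_per_series(minutes=WINDOW_MINUTES,
                               series=SCRIPTED_SERIES_PER_YEAR):
    """Average yearly hours per series at which the comparison exactly holds."""
    return hours_uploaded(minutes) / series


if __name__ == "__main__":
    print(hours_uploaded(WINDOW_MINUTES))   # hours uploaded in 15 minutes
    print(breakeven_hours_per_series())     # implied hours per series per year
```

In other words, 15 minutes of uploads comes to 7,500 hours, so the comparison holds as long as the average scripted series produces about 15 hours or less of new episodes a year.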

It’s tough to get at exactly how much time is spent in the tail, but we can triangulate on the conclusion that it’s a lot and it’s growing much faster than overall media consumption. Last quarter Facebook’s Daily Active Users (DAUs) hit almost 200 million in North America, or roughly 75% of everyone over 13 years old. eMarketer estimates that those people spend 38 minutes on Facebook per day. About half of US adults access YouTube daily too, according to the Pew Research Center. Layer in Instagram, TikTok, Snap, Pinterest, Reddit and so on, and it should easily reach two hours daily, on average, for every American adult. Corroborating the two-hour estimate: Mobile phone usage is closing in on four hours daily for the average adult in the U.S. (also according to eMarketer) — and when you pull out gaming, messaging and ecommerce, much of what is left is long-tail media consumption. Two hours might even be low. Either way, that’s significant relative to the roughly 12 hours that consumers spend with media each day (according to Activate).

Lesson 2. What it Got Wrong: Hits Still Matter, A Lot

Anderson was wrong that hits will matter less — in some cases, the biggest are bigger than ever. This part of the long tail theory has been discredited many times. We debunked it a decade ago when I was at Time Warner, in our investor presentations; in 2008, Anita Elberse published a Harvard Business Review article challenging it, an argument she later expanded in her book Blockbusters; and several other studies have shown that the biggest hits are as big, or bigger, in a digital environment, such as this one about the music industry. An intuitive reason why the big are getting bigger is that the “head” is reinforced by the “tail.” We can’t spend hours a day mucking around on social media unless we have some common experiences to socialize about. And with near universal always-on connectivity, it has never been easier to figure out what everyone else is recommending or doing — and, as a result, for the most popular content to become even more popular. More scientifically, several studies have shown how the most common forms of recommendation — both social recommendation, i.e., word-of-mouth, and collaborative-filtering based recommendation engines (“people who liked this also liked…”) — amplify the most popular content. (Here’s a particularly cool study that shows the influence of social recommendation on music popularity.)

To see why, try this thought experiment. Imagine a barrel with 1,000 balls in it, each of which is marked with a number from 1 to 10, with 100 balls of each number. Also imagine you have 10 urns, numbered 1 through 10. Now randomly pick 10 balls out of the barrel and, based on the number marked on each, put each in the corresponding urn. Now replace the 10 balls you removed from the barrel with new balls, but this time the distribution of new balls will be equivalent to the distribution of balls in the urns. (If there are two balls in urn #2 and none in #3, then two of the new balls should be marked #2 and none should be marked #3.) Keep running the process, removing 10 balls from the barrel at random, placing them in the corresponding urns, and adding new balls to the barrel based on the distribution of balls in the urns. After you run this process for enough cycles, what you find is that the urns with more balls are increasingly likely to have more balls added each time. That phenomenon, alternatively called cumulative advantage, preferential attachment or the “Matthew Effect” (the rich get richer), shows why the most popular content becomes even more popular when consumer choices are influenced by what other people do, whether explicitly (by taking friends’ recommendations, checking to see “what’s trending,” reading product reviews) or implicitly (collaborative filtering recommendation engines).
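The thought experiment is easy to simulate. The sketch below is one possible reading of it, not a definitive model: the function name `simulate_urns` and its parameters are hypothetical, and because the text does not say how to split 10 new balls once the urns hold more than 10, the code assumes the new balls are sampled at random in proportion to the cumulative urn counts.

```python
import random
from collections import Counter


def simulate_urns(cycles=2000, labels=10, per_label=100, draws=10, seed=0):
    """Run the barrel-and-urn process; return (urn counts, barrel counts)."""
    rng = random.Random(seed)
    # The barrel starts with the same number of balls for every label.
    barrel = [label for label in range(1, labels + 1) for _ in range(per_label)]
    urns = Counter({label: 0 for label in range(1, labels + 1)})

    for _ in range(cycles):
        # Remove `draws` balls at random and file each one in its urn.
        rng.shuffle(barrel)
        drawn = barrel[-draws:]
        del barrel[-draws:]
        urns.update(drawn)

        # Refill the barrel. Assumption: the labels of the new balls are
        # sampled in proportion to the cumulative urn counts so far.
        population = list(urns)
        weights = [urns[label] for label in population]
        barrel.extend(rng.choices(population, weights=weights, k=draws))

    return urns, Counter(barrel)


if __name__ == "__main__":
    # Different seeds stand in for different "histories" of the same market.
    for seed in range(3):
        urns, barrel = simulate_urns(seed=seed)
        total = sum(urns.values())
        top_label, top_count = urns.most_common(1)[0]
        print(f"seed={seed}: urn #{top_label} leads with {top_count / total:.1%}")
```

Every label starts with identical odds, so any lead that opens up comes only from early luck being fed back into the barrel. Running the loop with several seeds is a quick way to check whether the leading urn changes from one run to the next.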

In Figure 5, I show how the biggest movies have gotten even bigger over the past two decades, on an inflation-adjusted basis (box office data is the easiest to get, but I suspect TV and music look similar).

Figure 5. The Biggest Theatrical Hits are Getting Bigger

Source: Box Office Mojo, Author analysis.

Lesson 3. The Dwindling Middle

How do both the head and the tail get bigger at the same time? The middle is getting squeezed to the point where it is becoming indistinguishable from the tail. This is also pretty intuitive. When confronted with essentially unlimited choice, consumers are adopting a barbell approach, gravitating to the biggest hits, heavily influenced by brand recognition and recommendations, on the one end, or to the tail of authentic, relevant, viral, personal content, on the other. As the economics of the middle get squeezed, the logical response will be for companies in the middle to reduce investment, which will further reduce consumption and pressure revenue, creating a vicious circle that will ultimately result in the middle effectively disappearing into the tail. What is the middle? Consider the middle to be any content that formerly attracted attention solely because it benefited from scarce distribution: local newspapers or TV stations with weak local coverage, radio stations without distinctive on-air personalities, middling cable networks populated with second-tier reruns or inexpensive reality programming, mid-budget me-too theatrical releases, etc.

Here’s a litmus test: if it didn’t currently exist, would anyone think it was a good idea to invent it? If the answer is no, that’s the middle.

Figure 6 is a blown-up look at Figure 5, showing only the releases ranked 21–100 in each year. While the biggest hits in 2019 were bigger than in prior years, from release #21 on they are all smaller than the corresponding releases in prior years. This illustrates the pressure on the middle. There’s little reason to think this won’t continue.

Figure 6. The Middle is Under Pressure

Source: Box Office Mojo, Author analysis.

Lesson 4. Aggregating the Tail Provides Massive Firepower to Attack the Head

While Anderson understood the consumer appeal of the tail, he didn’t fully appreciate the enormous power from aggregating it. As Christensen explains in Solution, when one part of a supply chain is being commoditized, another is being de-commoditized, and value shifts along the chain accordingly. (Or to paraphrase George Gilder, value creation in these cycles is defined by what’s scarce and what’s abundant.) In the old world of scarce content, curation (or aggregation) was “abundant” (how much value was created by Reader’s Digest or TV Guide?); in the current world of abundant content, curation/aggregation is scarce. It is very hard for individual creators to make money in the tail, but it has generated literally trillions of dollars of value for the companies that aggregate it: Amazon, Facebook, Google and Netflix. As a result, they have vast resources to compete vigorously for the hits in the head.

Lesson 5. Hits Still Matter, But They Have Become Even More Unpredictable

While hits matter as much as or more than ever, another key implication is that where hits originate has become even more unpredictable. In reference to the movie business, William Goldman once famously said “Nobody knows anything.” Now people know even less. There is still a correlation between budget and the likelihood of producing a hit, but it’s declining. That’s partly due to the easier discoverability and virality of the best content. Blumhouse Productions, which has consistently produced blockbusters on comparatively minuscule budgets, is one of the most vivid examples. Also, many hits now emerge from the depths of the tail. PewDiePie, Charli D’Amelio, MrBeast and Ninja all came out of nowhere and now compete for attention with the biggest, high-budget TV shows. Some of the biggest new music acts to break in the last few years, including Post Malone, Billie Eilish, Marshmello, XXXTentacion, The Chainsmokers, Lil Uzi Vert and many others, all emerged on SoundCloud. And international superstars, like T-Series or BTS, travel globally much more easily than they used to. Yet another reason that hits are even more unpredictable is that there is an inherent randomness to virality, as illustrated by the thought experiment with the balls and urns. The balls that happen to be selected first have a much higher likelihood of dominating; even in a hypothetical world in which all content was of equal quality there would still be massive, random hits. Just as in the financial markets, higher volatility means higher risk, and higher risk means lower returns.

Figure 7. The New Shape of Media

Source: Author analysis.

So, What is the Future of Media? Fewer, Bigger Hits, A Longer Tail, No Middle, and Lower Returns

When you add all that up, you get a couple of conclusions:

Returns for media production and distribution have to fall. In all businesses, profitability is a function of competitive intensity and competitive intensity is increasing in every media production and distribution business, largely driven by a very similar process of disruption. Some media, like TV and theatrical, have been insulated from those forces by the cost and difficulty of producing and marketing high-production value content, but that is eroding because new entrants (who happen to be some of the largest and best-capitalized companies in the world) are now fully competing at the top end of the market. At the same time, the customer definition of quality is changing to include a dizzying array of new dimensions, like ease of use, accessibility, personalization, virality, authenticity, relevance to my sub-community, interactivity and many more, none of which are the incumbents’ strong suits and for which the barriers to entry are lower than producing and marketing high-production value content. On top of that, profits in many media are still closely tied to hits and hits are becoming even more unpredictable, raising risk and exerting even more pressure on returns.

The “shape” of media consumption is changing. The new shape of media looks like Figure 7: a taller, thinner head of fewer, bigger hits, a very long tail, and no middle. The companies that compete in the head will still find vibrant demand for their best (or most popular) content, but they will need just as many at-bats to produce fewer hits. And almost all of the companies that compete in the head still derive significant value from the dwindling middle. Companies that currently generate most or all of their value in the middle may struggle to survive. And the tail will continue to grow, mostly benefiting the curators/aggregators, not creators.

There are a couple of bright spots. Most of the bright spots fall outside the business of producing and distributing content. Lower prices (which sometimes means free), more choice and high investment in content are all shifting value to consumers; increased competition for high-quality content is shifting value to talent and rights owners (and their representatives); and the explosion of content is shifting value to curators/aggregators who have established a vital role in the supply chain and can siphon off rents as a result. It’s a good time to be a TV viewer, Shonda Rhimes or YouTube. Some niche producers will figure out how to capitalize on the democratization of hits, like Blumhouse or FaZe Clan. There is also a potentially large opportunity for marketplaces or tools that enable creators to better monetize the tail, like Patreon and Substack. Among traditional producers and distributors of media, the “bright spots” are relative. There is still a lot of value to extract from those media that will decline relatively slowly, such as those that produce the highest production value content (like the major movie studios) or benefit from high levels of consumer inertia (like pay TV networks). But they’ll still decline. And the very strongest brands, like Disney and The New York Times, may be able to take share of a shrinking pool of profits.

In the capital markets, fortunes are won or lost by understanding both speed and direction. Speed’s tough to guess. But the direction seems clear.

References

Anderson, C. (2004, October 1). The long tail. WIRED.

Anderson, C. (2006). The long tail: Why the future of business is selling less of more. Hachette Books.

Bower, J. L., & Christensen, C. M. (1995, January 1). Disruptive technologies: Catching the wave. Harvard Business Review.

Christensen, C. M. (1997). The innovator’s dilemma: When new technologies cause great firms to fail. Harvard Business Press.

Christensen, C. M., Hall, T., Dillon, K., & Duncan, D. S. (2016). Competing against luck: The story of innovation and customer choice. HarperCollins.

Christensen, C. M., & Raynor, M. E. (2003). The innovator’s solution: Creating and sustaining successful growth. Harvard Business Press.

Elberse, A. (2013). Blockbusters: Hit-making, risk-taking, and the big business of entertainment. Henry Holt and Company.

Salganik, M. J., Dodds, P. S., & Watts, D. J. (2006, February 10). Experimental study of inequality and unpredictability in an artificial cultural market. Science.