Infinite Content: Chapter 3

The Internet Did It: The Last Great Disruption of Media

This is the draft third chapter of my book, Infinite Content: AI, The Next Great Disruption of Media, and How to Navigate What’s Coming, due to be published by The MIT Press in 2026. The introductory chapter is available for free here. Subsequent draft chapters will be serialized for paid subscribers to The Mediator and can be found here.

In 1993, I moved to New York City to work as a junior analyst on Wall Street. Part of my job was to stay on top of any news that might affect the prices of stocks we covered. Every morning, I picked up The Wall Street Journal on my walk to the subway. I didn’t download it or open the app. I didn’t have a phone. Also, there was no app. I bought a physical newspaper.

It’s difficult to read a broadsheet while shoehorned into a narrow subway seat—or, even tougher, standing, with one hand gripping a handrail—but I observed my fellow riders’ technique: fold the newspaper in half vertically, so that you can read it one-half page-width at a time, with only one hand, and not invade anyone else’s personal space (a lost art, by the way).

The first thing I’d do was flip to the index and scan it, to see whether Disney, Comcast, Time Warner, Viacom, Blockbuster, Polygram, News Corp., New Line, Turner Broadcasting, Tele-Communications, Inc. (TCI), Cox or any other relevant public company was mentioned. The Journal was essentially the source of financial news and it was printed once daily. I had a computer at my desk, but it was only connected to local servers and printers. (The printer was next to the fax.) There was no consumer internet to speak of anyway; the Mosaic browser had just been released and there were few sites to visit. If important news broke during the day, I would get a frantic call from the trading desk that a story was “on the wire,” meaning one of the wire services, AP or Reuters. (They ran on private networks.)

At the time, I was staying for a couple of months at a family friend’s apartment, but I had to find my own place. On Sundays, The New York Times published a separate Real Estate section (as it still does), which included the most extensive real estate classifieds of the week. I would wait for the Sunday paper to come out, spread out the section on the kitchen counter, pen in hand, and scan through the listings, column-by-column, circling as I went, especially attuned to anything new. The ads were all text, usually heavily abbreviated to save space and cost (e.g., a two-bedroom, one-and-a-half-bath with an eat-in-kitchen and air conditioning might be abbreviated to “2BR/1.5 BA w/EIC & AC”). To get any sense of what an apartment looked like, I had to make an appointment and go check it out.

Whether 30 years ago sounds like a long time ago or not depends on your perspective. To me, it doesn’t. Yet, the picture I just painted sounds comically archaic. To say that the way we receive information—and the role of newspapers—has changed radically doesn’t capture it.

Today, nearly infinite information is available at our fingertips, literally, at all times of the day and night. It is pushed, searchable, customizable by us and tailored for us by unseen forces: increasingly sophisticated algorithms that monitor our behavior and predict our preferences, some of which we aren’t even explicitly aware of. It is multimodal, delivered via text, a combination of text and images, audio or video. It is created by professional producers (working for multinational corporations), amateurs next door or halfway around the world (working for free), and everyone in between.

The newspaper business still exists, but the term “newspaper” has become anachronistic, as very little news is delivered on paper. And, not surprisingly, the newspaper business has been decimated. In 2000, average daily circulation was almost 56 million U.S. adults and industry revenue peaked at $59 billion. By 2020, circulation (both physical and digital) had fallen by half and revenue had declined to about $20 billion, down by two-thirds. Over that same period, about one-third of newspapers closed. Today, much of the discussion in the newspaper business is whether it should be a business at all or whether its salvation lies in treating news as a not-for-profit undertaking.

If you asked someone to condense this course of events into a few words, they might look at you like you were a little daft and say “newspapers were disrupted by the internet.” Duh. But what exactly does that mean? What’s the internet? How did it disrupt newspapers—or magazines, music, DVDs, or cable, for that matter? In this chapter, we’ll try to answer those questions. Some of it will be a little technical, but my goals are to: 1) provide an intuitive understanding of how the internet changed the underlying architecture of media; 2) lay the foundation for analyzing the implications of those changes over the next few chapters—what I refer to as the “tectonic trends” affecting media today; and 3) introduce some technical concepts that will be important for our discussions about GenAI in the latter half of the book.

But to understand the importance of the internet, we need to step back to long before the World Wide Web was a twinkle in Tim Berners-Lee’s eye. We need to begin with digitization.

Our Analog World

All media are representations of sound, images and/or text. In nature, sound and light waves occur over a continuous range of values. (Text is a special case. It doesn’t occur in nature, since it’s an abstraction of language, which is in turn an abstraction of human thought.)

Let’s take sound, to make it more concrete. Sound occurs when something happens that makes an object vibrate, like vocal cords or a string. That creates a pressure wave, a continuous signal, that moves through the air. If it is powerful enough, produces vibrations in the right frequency range and reaches a receiver, like eardrums or a microphone, it produces sound. (Humans can generally hear tones in a frequency range between 20 cycles per second, or Hertz (Hz), and 20,000 Hz.)

Last chapter, we got a bit ahead of ourselves. I described the economic and business model implications of media being digital. But it wasn’t always that way. Prior to the commercialization of digital technologies, to reproduce sounds and images, most media had to also replicate this continuous range of values. Media was analog, so called because it was analogous to the source signal. Let’s go back, say, to the 1970s, to explore what this meant.

Sound was recorded by replicating a waveform on a physical medium, for example by etching the pattern on vinyl or magnetizing iron oxide particles on a cassette or reel-to-reel tape. Images were recorded by exposing light-sensitive paper to light, capturing the full range of tone (and, eventually, color) of the original image. Text was printed as characters on paper.

Before digitization and the internet, media distribution was siloed.

So, in 1975, the smallest parts (the atomic units) of each medium were different. For a song, it was the note or maybe a waveform; for a film, it was a still frame or perhaps the individual grains on the film; for text, it was the letter or punctuation mark. There was no common language between them. That meant that an innovation in one had no effect on other media. For instance, when recorded music transitioned from vinyl to 8-track tapes and cassettes in the late ’60s and early ’70s, it didn’t matter much for book publishing, newspapers or TV.

In addition, owing in part to the differences in physical media and the way in which they are used, each medium had (and, to this day, still has) a distinct physical distribution infrastructure. Movies were watched in movie theaters, projected on big screens by specialized cinema projectors. Books, records and VHS tapes were distributed like other packaged goods, through local distributors, rack jobbers, and, ultimately, (“brick and mortar”) retailers. Newspapers are far more ephemeral and time sensitive than most other media, so they require a highly specialized network of local distributors to get them to newsstands and people’s homes every day. Radio and TV were both broadcast over radio frequency spectrum, but the frequencies and amount of spectrum they used were tailored to their specific characteristics. (Radio requires less bandwidth and uses lower frequencies to enable long ranges; TV requires a lot more bandwidth and tends to trade off higher fidelity for a shorter range.)

Back then, the form factors over which people consumed different media were all obviously different too. You watched TV on a TV, listened to the radio on a radio, read a novel as a hardcover or softcover book, read a newspaper printed on flimsy newsprint, flipped through a magazine and so on.

In other words, for the most part, different media operated in very distinct, parallel silos: different atomic units, different distribution networks, different consumer form factors. That would change.

The Big Bang: Digitization

In 1948, Claude Shannon was toiling away in relative obscurity at Bell Labs in the West Village when he wrote “A Mathematical Theory of Communication” for the Bell System Technical Journal. The paper launched the field of Information Theory and the concept of the “bit,” or binary digit, the 0s and 1s that are the underpinning of all modern communications. The concept of representing continuous analog signals with discrete symbols has a rich history, including George Boole’s development of Boolean algebra (a binary logic system) in the 1850s and Harry Nyquist’s work in the 1920s on digital sampling. (For an entertaining and very thorough account, I recommend The Information: A History, A Theory, A Flood, by James Gleick.) But Shannon formalized the idea that an analog signal could be replicated using binary code, or what we now call “digitized.”

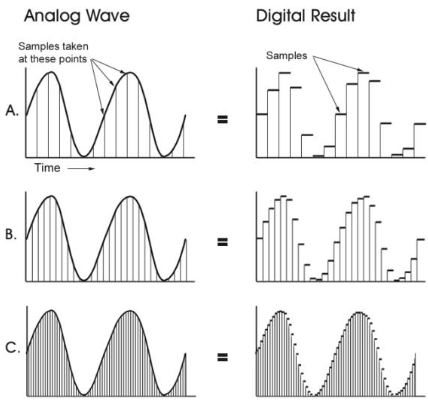

Digitization involves sampling a continuous sound wave or image at regular intervals, “quantizing” each sample (rounding it to the nearest of a fixed set of values) and converting that quantity to binary code, comprising bits. As shown in Figure 12, the idea is that the more frequent the sampling, the more closely the digital version approximates the original signal.

Figure 12. Increasing Sampling Rates

Source: Izotope (https://www.izotope.com/en/learn/digital-audio-basics-sample-rate-and-bit-depth.html).
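For readers who like to see the mechanics, here is a minimal Python sketch of the three steps just described: sample, quantize, convert to bits. It is only an illustration under simplifying assumptions of my own: the "sound" is a pure sine wave, and the function names, the 3-bit depth and the sample rates are hypothetical choices, not how any particular audio format works.

```python
import math

def sample_and_quantize(freq_hz, sample_rate_hz, bit_depth, duration_s):
    """Sample a sine wave at regular intervals and round each sample
    to one of 2**bit_depth integer levels (0 .. 2**bit_depth - 1)."""
    levels = 2 ** bit_depth
    n_samples = int(round(sample_rate_hz * duration_s))
    codes = []
    for n in range(n_samples):
        t = n / sample_rate_hz                           # time of this sample
        amplitude = math.sin(2 * math.pi * freq_hz * t)  # continuous value in [-1, 1]
        code = round((amplitude + 1) / 2 * (levels - 1)) # nearest discrete level
        codes.append(code)
    return codes

def to_bits(codes, bit_depth):
    """Write each quantized level as a fixed-width binary string."""
    return [format(c, f"0{bit_depth}b") for c in codes]

# The same one-second, 1 Hz wave sampled coarsely and more finely.
coarse = sample_and_quantize(freq_hz=1, sample_rate_hz=8, bit_depth=3, duration_s=1)
fine = sample_and_quantize(freq_hz=1, sample_rate_hz=32, bit_depth=3, duration_s=1)

print(to_bits(coarse, 3))
print(len(coarse), "samples versus", len(fine), "samples")
```

The finer version describes the same wave with four times as many points, which is the intuition behind the figure: more samples trace the curve more closely, at the cost of more bits.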

In the case of text, each letter is converted to a series of bits. English letters, for example, are encoded into bits using ASCII (American Standard Code for Information Interchange), which represents each letter using seven bits. (A is represented by 1000001, B is 1000010, C is 1000011 and so on.)
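The letter-to-bits mapping is easy to reproduce. The short Python sketch below uses the built-in `ord` and `chr` functions to look up each character's numeric code; the helper names `text_to_bits` and `bits_to_text` are my own, introduced just for illustration.

```python
def text_to_bits(text):
    """Return the 7-bit ASCII code of each character as a string of 0s and 1s."""
    bits = []
    for ch in text:
        code = ord(ch)                    # numeric code of the character
        if code > 127:
            raise ValueError(f"{ch!r} is not a 7-bit ASCII character")
        bits.append(format(code, "07b"))  # pad to seven binary digits
    return bits

def bits_to_text(bits):
    """Reverse the process: turn each 7-bit string back into a character."""
    return "".join(chr(int(b, 2)) for b in bits)

encoded = text_to_bits("ABC")
print(encoded)                # ['1000001', '1000010', '1000011']
print(bits_to_text(encoded))  # ABC
```

Because the mapping runs in both directions without loss, the text can be stored or sent as bits and recovered exactly.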

Images are also converted to bits, in a more complicated way. (Since it will be relevant for our later discussions about GenAI, I’ll go into a bit of detail.) Every image comprises some number of pixels, depending on the resolution (e.g., 1080 x 1080 = 1.2 million pixels; 1920 x 1080 = 2.1 million pixels, and so on). Usually, each pixel has three color channels, red, green and blue (R,G,B) and each color channel has a corresponding value, ranging from 0 (none) to 255 (full intensity). So, every pixel is described by three values. A black pixel is (0,0,0), a pure red pixel is (255,0,0) and a white pixel is (255,255,255). (There are 256 x 256 x 256 possible combinations, or 16.8 million colors.) Since each color value can range from 0-255, each can be represented by 8 bits (2^8 = 256). And since each pixel has three color values, each pixel requires 24 bits (8 + 8 + 8). Anyway: still bits.
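The pixel arithmetic can be checked in a few lines of Python. This is a sketch under the assumptions stated in the text (three 8-bit color channels) plus two of my own: no compression and no transparency channel. The function names are hypothetical.

```python
BITS_PER_CHANNEL = 8
CHANNELS = 3  # red, green, blue

def pixel_to_bits(r, g, b):
    """Encode one (R, G, B) pixel as a 24-character string of 0s and 1s."""
    for value in (r, g, b):
        if not 0 <= value <= 255:
            raise ValueError("each color value must be between 0 and 255")
    return "".join(format(value, "08b") for value in (r, g, b))

def uncompressed_bits(width, height):
    """Bits needed to store every pixel of an image with no compression."""
    return width * height * BITS_PER_CHANNEL * CHANNELS

print(pixel_to_bits(0, 0, 0))         # black: 000000000000000000000000
print(pixel_to_bits(255, 0, 0))       # pure red: 111111110000000000000000
print(pixel_to_bits(255, 255, 255))   # white: 111111111111111111111111
print(256 ** 3)                       # 16777216 possible colors
print(uncompressed_bits(1920, 1080))  # 49766400 bits for one 1920 x 1080 frame
```

That last number, roughly 50 million bits for a single uncompressed frame, hints at why compression matters so much for digital images and video.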

To hammer home the key point, it is now possible to encode all information—sounds, images (moving or not) and text—the same way. So, while in an analog world, there was no common language among media, digitization changed that. It made the atomic unit of all media the same: the bit.

Throughout the 1980s-1990s, media shifted from analog to digital: music transitioned from vinyl and cassettes to CDs; video moved from VHS to DVD; digital creation hardware and software started to emerge, like MIDI and digital synthesizers, digital cameras and video editing; cable systems started to upgrade to digital signals and digital set-top boxes to decode them, etc.

This transition had real benefits. It increased fidelity and durability, because digital formats don’t degrade the same way as analog (there is no tape hiss and there are no film scratches). It made it more efficient to store media, because it could be compressed. And it made it easier to edit, remix, enhance, and replay media, because it could be accessed non-linearly (you could shuffle an album, for instance, rather than listen in order). All that is good. But perhaps not revolutionary.


Far more important, bits have universality, in the sense that they are the standard unit for representing, processing, storing and transporting all digital information, regardless of the source, application, device, medium or communications network.

For media, it is hard to overstate the significance of this change. This standardization of all media was the Big Bang that paved the way for all innovation that has followed since, including what we colloquially, collectively, call “the internet.”
