Is GenAI a Sustaining or Disruptive Innovation in Hollywood?

Progressive Syntheticization vs. Progressive Control

There is a lot of debate and confusion in Hollywood about the likely impact of GenAI on the TV and film production process and cost structure. (For reference, I’ve written a lot about the topic, including Forget Peak TV, Here Comes Infinite TV, How Will the “Disruption” of Hollywood Play Out?, AI Use Cases in Hollywood and What is Scarce When Quality is Abundant.)

Some believe that the effects will be relatively marginal. They reason that it may increase efficiency and reduce costs, but as was the case for innovations like DSLRs or CGI, these savings will mostly end up as higher production values on screen. Others think it will be transformative. The AI-video corner of Twitter/X (AIvidtwit?) includes plenty of posts declaring that “Hollywood is dead” and the impact of AI on jobs was, of course, a central issue in the recent WGA and SAG-AFTRA strikes.

Another way of framing this split is that the former camp is viewing GenAI as a sustaining innovation and the latter as a disruptive innovation. So, which is it? The answer is that it can be both, depending on how it is used.

The application of GenAI to TV and film production is fundamentally about “syntheticization,” replacing physical and labor-intensive elements of the production process—sets, locations, vehicles, lighting, cameras, costumes, make-up and people, both in front and behind the camera—with synthetic elements, made by a computer. This is much more efficient, but it requires a tradeoff with quality control. Each pixel created by a computer delegates some human oversight and judgment to AI.

Opinions in Hollywood about GenAI are polarized partly because syntheticization is happening from two different directions, reflecting opposing approaches to the tradeoff between efficiency and quality control. One approach is what we can call “progressive syntheticization”: systematically incorporating GenAI into existing production processes. The other is what we can call “progressive control”: starting out entirely synthetic and systematically increasing creator control. The former is a sustaining innovation; the latter is disruptive. As in Rashomon, different perspectives yield different conclusions.

In this essay, I explore the distinctions between these approaches and the implications.

TL;DR:

GenAI can be either a sustaining or a disruptive innovation, depending on how it is used. Whether you think it is the former or the latter depends on who you are and where you look.

Many traditional studios, both majors and independents, are pursuing “progressive syntheticization” and incrementally incorporating GenAI tools into existing workflows. Like most incumbents, they view technology as a tool to improve the cost and/or quality of existing products and processes, the definition of a sustaining innovation.

At the same time, AI video generators, such as Runway, Pika and Stable Video Diffusion, started off entirely synthetic, with limited creator control, and are providing more control over time (“progressive control”). Not surprisingly, creators outside the traditional Hollywood system are the most excited about these tools.

The initial output of these tools was almost a joke: surreal, disturbing and generally unwatchable (in the language of disruption theory, clearly not “good enough”). But they are improving at a startling pace and creators are developing custom workflows using multiple tools to get even more control and better output.

This is the definition of a disruptive innovation: something that starts out as an inferior product, gets a foothold, and then gets progressively better.

When a technology can be either a sustaining or a disruptive innovation, the sustaining use cases usually go mainstream first. So, the first mainstream commercial applications of GenAI may not move the needle much.

In parallel, the AI video generators will keep getting better until they cross the threshold of “good enough,” for a sufficient number of consumers, for certain content genres.

GenAI may look like a sustaining innovation—until it suddenly doesn’t. It will be easy to be lulled into complacency, if you’re looking in the wrong place.

The Same Technology Can be a Sustaining or Disruptive Innovation

In his theory of disruptive innovation, Clay Christensen distinguished between sustaining and disruptive innovations (Figure 1). From The Innovator’s Solution:

A sustaining innovation…[enables incumbents to make] a better product that they can sell for higher profit margins to their best customers.

Disruptive innovations, in contrast…disrupt and redefine…[markets] by introducing products and services that are not as good as currently available products […]. Once the disruptive product gains a foothold in new or low-end markets…the previously not-good-enough technology eventually improves enough to intersect with the needs of more demanding customers.

Figure 1. Sustaining vs Disruptive Innovations

Source: Author, adapted from The Innovator’s Dilemma.

A key point here is that disruptive innovations always start as not “good enough”—or as Chris Dixon puts it, “the next big thing always starts out being dismissed as a ‘toy.’” They are always cheaper to implement, but the initial tradeoff for low cost is lower performance (or quality) than the incumbents’ products or services.

Another important point about this distinction, often overlooked, is that the same technology can be a sustaining or disruptive innovation, depending on the way it is applied. Usually, people think of technologies or business models as being inherently either sustaining or disruptive. The fifth blade on a razor is sustaining; a D2C subscription for razor blades is disruptive. But that’s not always the case.

Let’s take the application of “digital technology” to the music business as an example. In nature, sound occurs as an analog waveform. Analog media like vinyl records or cassette tapes replicate these waves through physical alterations to the media. Digitization, by contrast, converts the analog signal into a representation made of bits.

One of the first applications of digital technology in music was the creation of CDs, which was a sustaining innovation. Relative to vinyl or cassettes, CDs offered better sound quality (less background noise), greater durability and easier control over playback. Music labels and retailers marketed them as an improvement and sold them at higher prices. Another application of digital technology was the creation of the MP3 format and the transport of those files over networks, which was a disruptive innovation. These files were often lower quality (or sometimes corrupted or mislabeled) and passed around for free on peer-to-peer file sharing networks. The rest is history.

When a technology is applied in both a sustaining and disruptive way, the sustaining application usually goes mainstream first.

There are many other examples of a single technology used in both a sustaining and disruptive way. Since the sustaining approach is easier to implement and the disruptive approach initially produces an inferior product that doesn’t serve most customers, the sustaining application usually goes mainstream first. Cloud computing was initially an extension of data center hosting but ultimately paved the way for the “hyperscalers.” Or, consider 3D printing, which was initially used to speed prototyping as a part of traditional manufacturing processes but eventually replaced some forms of manufacturing altogether.

In TV and film production, GenAI is also being approached as both a sustaining innovation—“progressive syntheticization”—and a disruptive innovation—“progressive control.”

Progressive Syntheticization

Many incumbent studios, both majors and independents, are looking to systematically incorporate GenAI into existing production workflows (progressive syntheticization). This continues a tradition as old as filmmaking itself, one that long predates AI. It’s a sustaining innovation because it aims to improve the cost and/or quality of existing products and processes.

TV and Film Production Has Been Getting More Synthetic for Decades

All fictional TV and filmmaking is partially an effort to make audiences suspend their disbelief and pretend that something that clearly didn’t happen actually happened. Toward this end, virtually since the inception of film, filmmakers have been combining synthetic and live action elements to create a sufficiently believable illusion. From AI Use Cases in Hollywood:

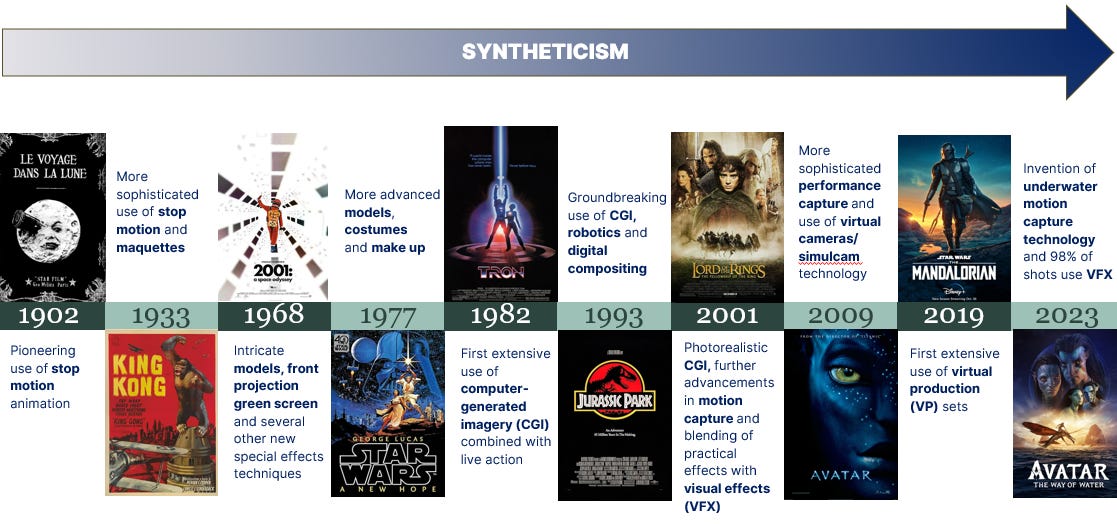

You can draw a line from Georges Méliès using stop motion animation in A Trip to the Moon (1902) to the intricate sets in Fritz Lang’s Metropolis (1927) to the maquettes in King Kong (1933) to the even more sophisticated models, costumes and make-up in Star Wars (1977) to the first CGI in TRON (1982) and the continuing evolution of computer graphics and VFX in Jurassic Park (1993), the Lord of the Rings trilogy (2001) and Avatar (2009), to where we are today. Every step has become more divorced from reality…[T]oday almost every mainstream film has some VFX and, in a film like Avatar: The Way of Water, almost every frame has been heavily altered and manipulated digitally.

This history of syntheticization is pictured in Figure 2. Note that, until the advent of CGI in the early 1980s, most of the innovation in syntheticization consisted of adding synthetic physical elements (maquettes, prosthetics, physical special effects, etc.); after that, most of it consisted of adding synthetic virtual elements, created on a computer.

Figure 2: The History of Filmmaking as a Process of Syntheticization

Source: Author.

Progressive Syntheticization is a Sustaining Innovation

The goal of progressive syntheticization is to reduce the cost and time required by the existing production process without adversely affecting the viewer experience. Like most sustaining innovations, it seeks to improve the cost/quality (or cost/performance) tradeoff of existing products.

In AI Use Cases in Hollywood, I detailed how this will work in practice (Figure 3). Current/near-term applications include using:

Text-to-image tools like Midjourney or DALL-E to quickly create concept art like storyboards and animatics, such as this example from Chad Nelson.

ChatGPT or purpose-built LLM wrappers to help with script development and editing.

Text-to-3D and NeRF/Gaussian Splatting tools, like those from Luma Labs, to develop 3D assets for previs or even production.

Purpose-built text-to-image generators like Cuebric or Blockade Labs to develop virtual production backgrounds.

Tools like Vanity AI, Wonder Dynamics and Metaphysic to automate and improve certain VFX processes.

Tools like Flawless or Deepdub to automate localization services (“dubbing and subbing”) and enable digital re-shoots.

Foreseeable future applications include further virtualization of physical elements. For instance, it should be possible to lean more heavily on digital costumes and make-up. Face-swapping technology may enable the use of “acting doubles” and obviate the need for A-listers to show up on set, at least some of the time. More extensive use of AI-generated digital assets may enable simpler, less expensive sets. Adobe promises that once it embeds its Firefly GenAI tools into Premiere Pro, the software will auto-create simple storyboards just by reading the script and even take a first pass at the edit by synching footage with the screenplay or audio.

Figure 3. Current and Future AI Use Cases in TV and Film Production

Source: Author.

Progressive Control

At the other end of the spectrum are tools that are entirely synthetic and prioritize efficiency over creator control, namely the AI video generators, like Runway Gen-2, Pika 1.0, the open source Stable Video Diffusion and yet-to-be-released tools like Google’s Imagen and Meta’s Emu. (In fact, at first the prompt itself was creators’ only lever to control the output.) Not surprisingly, many incumbents are dismissive of these tools, while creators outside the traditional Hollywood system, who have the most to gain and the least to lose, are the most enthusiastic.

Like other disruptive innovations, the initial quality of these tools is clearly not “good enough.” Early clips produced some grotesque results and, even now, they have challenges with temporal consistency between frames, motion and synching speech and lips. You would not pop a bag of popcorn and sit down and watch a movie entirely created in Gen-2. But these products are evolving at a lightning pace, giving creators increasingly more control and producing much better output. Creators are also developing custom workflows using multiple tools to exert even more granular control and produce even better results.

Like all disruptive innovations, the AI video generators are not good enough yet — but it is important to understand how quickly these tools are evolving.

Figure 4. Runway Gen-2 Product Development Over Last Six Months

Source: Author.

Runway’s Product Development Over Just Six Months

Let’s take Runway Gen-2 as an example (Figure 4).

It launched as a text-to-video generator in June and was, at that time, basically a slot machine. You would enter a text string, “pull the handle,” and see what you got. Since then, Runway has been progressively giving creators more control and improving the quality of the output.

In July, it enabled creators to upload a reference image (image-to-video). Users could iterate through prompts on the native text-to-image generation tool within Gen-2 or use other text-to-image generators, like Midjourney or DALL-E.

In August, it added a slider to control motion of the output video.

In September and October it rolled out Director Mode, which enables panning and zooming.

Last month it added Motion Brush, which enables creators to isolate and add motion to specific elements of the footage.

Throughout, it has been improving temporal consistency from frame-to-frame and the realism of motion.

To give a sense of this progress over just six months, here are a few videos created using Gen-2. The first is from July. It is a pretty disturbing trailer for a hypothetical Heidi movie. Not recommended right before bed.

By contrast, below is the winner from Runway’s Gen:48 short film competition from last month. The film cleverly features characters in helmets to overcome the current challenges of synching speech and lip movements. But the temporal consistency is much better.

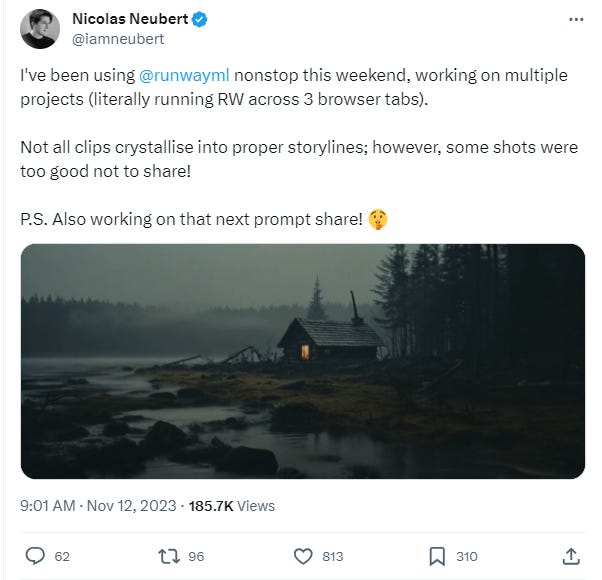

Or, check out this short video (link embedded in the tweet image), also made with Runway. As you’ll see, motion still looks a little awkward and the humans don’t speak. But the people and the imagery are pretty stunning.

Runway’s stated north star is to enable the creation of a watchable 2-hour feature film. Based on its current trajectory, this seems attainable in the next few years. Temporal consistency and motion will continue to get better, it will add more granular creator control and it will inevitably sync speech with lip movement.

Multi-Tool Workflows

Beyond the inevitable improvement in Runway, Pika and open source models, creators are increasingly adopting workflows that combine multiple tools. For instance, here is a re-imagining of Harry Potter as a Pixar movie. (An observation as a non-lawyer: This obviously uses copyrighted material, but presuming the creator isn’t monetizing the video, it probably falls under fair use.) It used Midjourney and DALL-E for the reference images, Firefly in Photoshop for fine-tuning them, Runway to animate the images and D-ID to make the characters’ dialog look believable.

Or, check out The Cold Call, which uses Midjourney for the reference images (the main characters bear striking resemblances to Tom Hardy and Mahershala Ali), both Runway and Pika to animate them and Wav2Lip to synch the voices with the characters’ lips. It is still easily distinguishable from live action footage, but it’s a quantum leap from the Heidi video.

For one more, here is One of Us, which also likely uses a combination of Midjourney, Runway, Pika and ElevenLabs (to create voices). It also cleverly uses the child’s perspective to avoid having to synch voices with lips.

The Arc is Toward More Granular Control

It’s hard to overstate how quickly all this is moving. It’s tough to stay on top of the pace of development in AI, even if it’s your full-time job. If you check out futuretools.io, for instance, it shows over 200 text-to-video, video editing, generative video and image improvement tools (Figure 5). But the clear arc of all these tools is toward ever-increasing fidelity and more granular creator control. Consider what’s emerged over just the last few weeks.

Figure 5. The Number of AI Video Tools is Overwhelming

Pika launched its first product only about six months ago, and last month it unveiled Pika 1.0 with the video in the tweet/post embedded below (link embedded in the tweet image). Perhaps the most remarkable part comes at the 0:43 mark: Pika 1.0 makes it possible to highlight specific elements in the image and change them on the fly with a text prompt (as opposed to taking the time to test and re-render video using multiple prompts).

Meta recently teased advancements in Emu Edit and Emu Video, text-to-image and video generation models that will also make it possible to edit specific footage elements in real time, with simple text instructions.

Stability.ai just released SDXL Turbo, an open source real-time text-to-image generation tool. The output may be lower quality than Midjourney, but the ability to generate ideas so quickly makes it more practical for creating precise concept art for use as reference images in image-to-video generators.

Tools like Vizcom make it possible to convert a sketch and a text prompt into a photorealistic rendering. Similarly, Krea.ai uses a technology called Latent Consistency to enable AI-assisted real-time drawing, as shown below. With just a text prompt and very crude drawing skills, a creator can make and refine whatever she can imagine. These kinds of tools provide creators even more granular control of the reference images they use for video generation.

The Effect of GenAI May Seem Marginal, Until it Suddenly Doesn’t

So, which is it? Will GenAI be “just another tool” and have a marginal impact on the TV and film business or will it transform it?

As is usually the case, the answer probably lies at neither extreme, but I lean more heavily toward the latter. I’ve laid out the reasons elsewhere (like Forget Peak TV, Here Comes Infinite TV, How Will the “Disruption” of Hollywood Play Out?, AI Use Cases in Hollywood and What is Scarce When Quality is Abundant), but the idea is that GenAI will lower the barriers to entry to produce quality video content, even as the consumer definition of quality is shifting to de-emphasize high production values. “Always” is a dangerous word, but I will stick my neck out: lower barriers to entry are always disruptive for incumbents. (If you have counter examples, let me know!)

To reinforce a point I made above, however, it is important to recognize that the first mainstream use cases of GenAI will probably be sustaining innovations, not disruptive innovations (like faster creation of concept art in pre-production, more efficient VFX work or more convincing de-aging and dubbing). These may yield some cost savings or improve production values, but they probably won’t move the needle much.

At the same time, however, the progressive control approach will keep getting better. At some point, likely within 5 years, AI video generators will surpass the “good enough” threshold for certain genres, for a sufficient number of consumers (Figure 6), empowering the massive independent creator class. In other words, it will probably look marginal, until it doesn’t.

It will be easy to be lulled into complacency and underestimate the implications, if you’re looking in the wrong place.

Figure 6. GenAI May Seem Like a Sustaining Innovation, Until it Doesn’t

Source: Author, adapted from The Innovator’s Dilemma.
