Forget Peak TV, Here Comes Infinite TV

The Four Technologies Lowering the Barriers to Quality Video Content Creation

[Note that this essay was originally published on Medium]

I recently posted an essay called The Four Horsemen of the TV Apocalypse. I got a lot of feedback that the piece raised important ideas, but also that, at >10,000 words, many would be put off by the time commitment required. This is an attempt to convey the same ideas in a shorter version.

Tl;dr:

The growing realization that streaming TV is less profitable than the declining traditional TV business is causing ripple effects along the entire entertainment value chain. Disney CEO Bob Iger recently called it “an age of great anxiety.”

One notable thing about all this angst is that it has been caused by disruption of only one part of the value chain. Over the last decade, the barriers to distribute video content have plummeted, but the barriers to create TV series and films have risen dramatically. Creating that content is expensive and risky and, consequently, is still dominated by only a handful of big entertainment and tech companies.

This essay makes the case that, over the next decade, quality video content creation is on a path to be disrupted too. The question is not whether we have achieved “peak TV,” but what happens when we have “infinite TV?”

Short form video, namely YouTube and TikTok, is already effectively infinite. But entertainment companies, “creators” and consumers largely think of this as distinct from TV series and movies, with a far lower quality and very different use cases.

Below, I discuss four technologies that, collectively, could increasingly blur these distinctions over the next 5–10 years, resulting in “infinite” quality video content. Several are early, but they are not theoretical. They are all happening now.

Short form video is changing some consumers’ definition of quality in a way that de-emphasizes the importance of high production values, lowering the barrier to entry; the hand-in-glove technologies virtual production and AI are on a path to democratize high production value content creation tools; and web3 has the potential to dramatically broaden access to capital.

I am not making a value judgment about these trends, especially AI, which is deeply unsettling to many, or discussing their potential effect on employment, which could be meaningful. They are progressing whether one thinks they are good or bad.

The surprisingly far-reaching implications of the disruption of video distribution over the past decade show how hard it is to predict the implications of a similar disruption of content creation. But exploring even obvious first order effects suggests that the changes in the entertainment business in the next decade could be more profound than what occurred over the prior one.

A Very Brief Recent History of TV: Video Distribution Has Been Disrupted, High Quality Video Content Creation Has Not

Anyone who follows the TV business knows that it is currently struggling with the transition from highly-profitable traditional pay TV to far less profitable streaming (see here, here and here). The ripple effects are felt everywhere along the value chain: talent, sports leagues, broadcast and cable networks, theaters, stations, agencies, advertisers, pay TV distributors, you name it.

Even if you follow it closely, it’s easy to lose sight of how we got to this point. The root cause is that TV distribution was disrupted. In The Four Horsemen, I explain in detail how TV distribution is a textbook example of Clayton Christensen’s disruption process.

As the barriers to distribute video have fallen over the last decade or so, however, the barriers to create high quality content have risen. The chief expenses are talent, both behind and in front of the camera, special/visual effects and marketing. With the entrance of Netflix, Amazon and Apple, those costs have increased, both because of increased bidding to attract a finite pool of talent and an arms race to put ever-higher quality on screen.

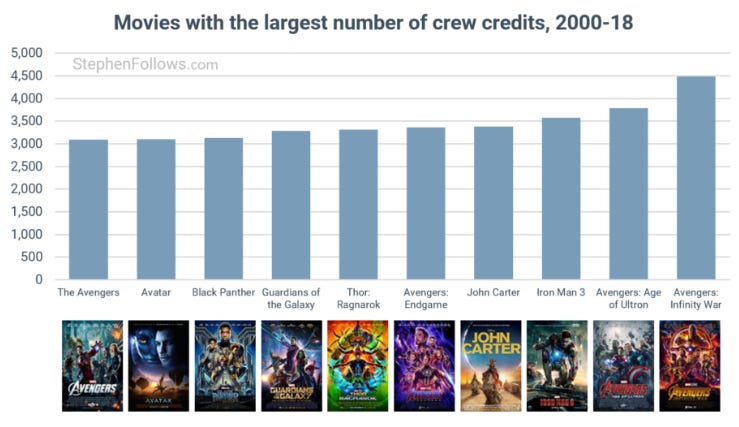

Ten years ago, production costs for the average hour-long cable drama were about $3–4 million. Today it is common to see dramas exceed $15 million per episode (Figure 1). Any guess how many people it takes to make a big, special/visual effects-laden movie? As shown in this great analysis by Stephen Follows of IMDb credits from 2000–2018, Avengers: Infinity War had the most, almost 4,500 people (Figure 2). Avatar: The Way of Water is probably higher than that.

Figure 1. Many TV Series Now Exceed $15 million Per Episode in Production Costs

Source: Stacker.com

Figure 2. The Most Labor Intensive Movies Employ Thousands of People

Source: Stephen Follows.

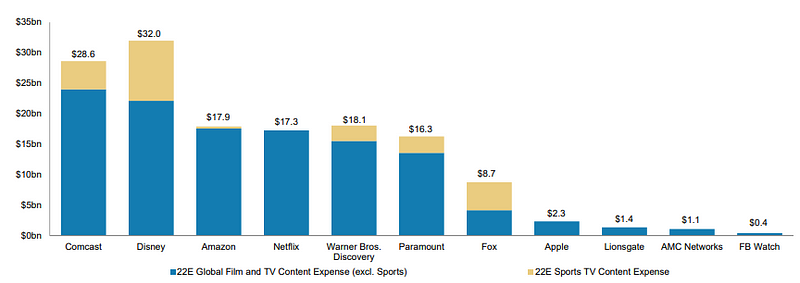

The high cost and high risk of quality content creates a moat.

Producing content is also very risky, because returns are highly variable and almost all expenses are front loaded. Only large companies with strong balance sheets and a large portfolio of projects can manage this risk. As a result, TV and film production spending is still dominated by just a handful of companies. Figure 3 shows Morgan Stanley’s estimates for 2022 content spend from the largest spenders. Although the estimates may be somewhat dated, the point is that this list looks little changed from five or even ten years ago, other than the addition of Amazon and Netflix and a couple of mergers. Disney, Comcast (NBCU), Warner Bros. Discovery and Paramount are still at the top of the list.

Figure 3. Seven Companies Still Dominate Global Video Content Spend

Source: Morgan Stanley Technology, Media and Telecom Teach In, May 2022.

Forget Peak TV, What are the Implications of Infinite TV?

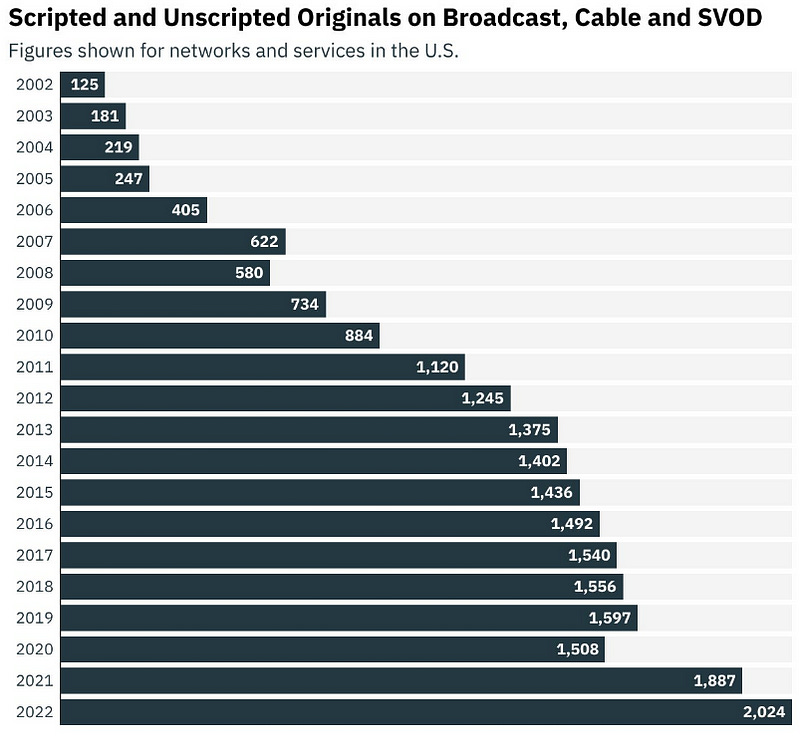

John Landgraf, Chairman of FX Networks, coined the phrase “peak TV” to describe the explosion of original programming on cable networks and streaming services over the last decade (Figure 4).

Figure 4. Original Programming Has Almost Doubled in the Last Decade

Source: Variety Insight by Luminate, Variety Intelligent Platform analysis.

Not surprisingly, there is growing evidence that TV has, finally, peaked. The question I raise in this essay, however, is whether focusing on the content arms race between Netflix, Disney, Comcast, et al. is too narrow a view. (It is a little like debating whether Cersei or Daenerys will prevail as the army of the dead amasses at the Wall.) What if the arrival of “peak TV” is a temporary breather before the onslaught of “infinite TV?”

What’s infinite TV? First, let’s establish some nomenclature. Although it’s flawed, for convenience, I’ll refer to professionally-produced, Hollywood establishment content as “long form” and user generated or creator content as “short form.” Short form is effectively already “infinite.” YouTube has 2.6 billion global users and ~100 million channels that upload 30,000 hours of content every hour. That is equivalent to Netflix’s entire domestic content library — every hour. TikTok has 1.8 billion users. And while we don’t know how many hours of content are on TikTok, 83% of its users also upload content.

Infinite TV describes the blurring distinction between professionally-produced (“long form”) and independent/creator/UGC (“short form”) content, as consumer standards fall, high production value tools are democratized and financing becomes more broadly accessible.

Despite the almost unfathomable enormity of short form, most don’t consider it a threat to Hollywood. The entertainment companies, most consumers and even independent “creators” themselves consider it a different thing, of a lower quality and with different use cases. This view is supported by the usage data. Consulting firm Activate estimates that TV viewing (defined as traditional plus streaming of professionally-produced content) by adults 18+ hasn’t changed much over the last few years despite the growth of short form (what it refers to in the charts as “social video”). It also forecasts long form viewing won’t change much in the next few even as short form continues to grow (Figures 5 and 6).

Figure 5. Viewing of Long Form Video Has Remained Flat…

1. Figures do not sum due to rounding. 2. “Digital video” is defined as video watched on a mobile phone, tablet, desktop/laptop, or Connected TV. Connected TVs are TV sets that can connect to the internet through built-in internet capabilities (i.e. Smart TVs) or through another device such as a streaming device (e.g. Amazon Fire TV, Apple TV, Google Chromecast, Roku), game console, or Blu-ray player. Does not include social video. 3. “Television” is defined as traditional live and time shifted (e.g. DVR) television viewing. Sources: Activate analysis, eMarketer, GWI, Nielsen, Pew Research Center, U.S. Bureau of Labor Statistics.

Figure 6. …Even as Short Form Continues to Grow

Sources: Activate analysis, eMarketer, GWI, Nielsen, Pew Research Center, U.S. Bureau of Labor Statistics.

The technology that enabled the disruption of video distribution was, of course, “the Internet” (which is really a suite of technologies). Below, I discuss four enabling technologies that could blur the quality distinction between short form and long form content and similarly disrupt video content creation over the next decade.

These are not concepts or theories; they are all happening today. Individually, none of them may seem very transformative and some are earlier than others. But, as you read through them, think about what effect they may have collectively. Also, think about how they will improve. For the most part, these technologies are gated by shifting consumer behavior, the sophistication of algorithms, the size of datasets and compute power, all things that have the potential to progress very fast and in unpredictable ways.

The effects could be more profound than what’s happened over the prior decade. I discuss them in order of immediacy.

TikTok, YouTube and the Changing Consumer Definition of Content Quality

Let’s start with the most present threat: short form.

As mentioned above, short form is massive. As also mentioned, it is not generally regarded as a direct threat to traditional long form video. Short form is thought of as a “different thing” than TV and especially movies, initiated when people don’t want (or intend) to commit to a 30 minute-or-longer show (like when procrastinating, on the train, waiting in line or just in need of a quick dopamine hit).

The chief risk from TikTok is that it changes the consumer definition of quality and lowers the bar.

One of the most insidious and least understood parts of Christensen’s disruption process, referenced above, is that sometimes new entrants change consumers’ definition of quality. It’s so dangerous because executives tend to get rooted in one definition of quality, but consumers’ definitions are constantly evolving.

Executives get rooted in one definition of quality, but consumers’ definitions are always evolving.

By quality, I don’t mean craftsmanship, I mean the combination — and relative weighting — of attributes that one considers when choosing between similar goods or services for an intended use. Under this definition, revealed preference definitionally reveals quality preference. If someone is choosing between two identically priced Gucci and Louis Vuitton purses and says “I think the Louis Vuitton is better made, but I’m buying the Gucci because it’s trendier,” that means they actually think the Gucci is higher quality because their internal quality algorithm values trendiness more than craftsmanship. Importantly, this doesn’t mean that craftsmanship doesn’t matter at all, it just means that its relative importance is lower.

Disruption often changes consumers’ definition of quality. Think about how Airbnb has changed the definition of quality in lodging. Cleanliness, location and customer service are all still important attributes of “quality,” but for some people there are now new attributes, like a full kitchen, much more space or a quiet neighborhood. In TV, Netflix ingrained new measures of quality too. The emotional effect of the content is still important (surprising, exciting, dramatic, funny, etc.), but now new attributes are also important, like having all the episodes available on demand or being ad-free, among other things.

Most studio executives equate TV and movie quality with very high-cost attributes: high production values; established, well-known IP; brand name directors, show-runners, actors and screenwriters; and expensive effects, often signaled by equally expensive marketing campaigns. Short form doesn’t (currently) compete on these attributes. But it ranks much higher on other attributes, like virality, surprise, digestibility, relevance to my community and personalization. These attributes are not inherently expensive.

By introducing new measures of quality, like virality, digestibility or personalization, TikTok and YouTube are causing some consumers to de-emphasize costly high production values.

To the extent that consumers consciously substitute short form for traditional TV, this reveals that their definition of quality is shifting toward de-emphasizing high-cost attributes, and, in the process, lowering the barrier to entry. It seems like this is what’s starting to happen. According to TikTok, as of March 2021, 35% of users were consciously — and therefore intentionally — watching less TV since they started using TikTok.

To the extent that short form doesn’t really compete with TV and movies, it isn’t a threat. But if short form is reducing the importance of the traditional, expensive markers of content quality and the production value of this content also goes up, then it is.

How will the production value of short form go up? Let’s keep moving.

Virtual Production and Falling Production Costs

Virtual production is an emerging film and TV production process that promises to greatly increase efficiency and flexibility. But it is a double-edged sword: it may lower both production costs for incumbent studios and the entry barriers to creating quality video content.

The Traditional Production Process is Linear

To understand the significance of virtual production, you must start with the traditional TV or film production process. Simplistically, it proceeds in distinct, linear phases: from pre-production (storyboarding, casting, refining the script, scouting locations) to production (principal photography) and finally to post-production (editing and visual effects (VFX)). VFX involves adding elements to the film that weren’t there during shooting, most of which today is computer generated imagery (CGI or often just CG). Below is one of those fun clips showing how foolish actors look emoting in front of a green screen, contrasted against the final cut. (The first 30 seconds is enough.)

Virtual Production is Continuous and Iterative

Virtual production (VP) uses technology to enable greater collaboration and iteration between the traditional phases of production (and blurs the boundaries between them). Key enabling technologies are massive increases in computing power and real-time 3D rendering engines, namely Epic’s Unreal Engine (UE), Unity and Nvidia Omniverse, which have quickly emerged as industry standards.

The idea is that every visual element within a frame, whether physical or virtual — characters, objects and backgrounds — is a digital asset that can be adjusted in real time (lighting, positioning, framing). Among other benefits, the cast and crew can see each shot essentially as it will look “final pixel,” as opposed to looking at a green screen. Importantly, the digital assets created during this process can be repurposed in sequels, prequels or other productions and even ported to “non-linear” experiences, like gaming, VR/AR or virtual worlds.

Use Cases: Progressing From Hybrid Live Action to Fully Digital

Right now, VP is being used primarily to augment the live action production process, but the arc is toward all-digital productions over time.

Hybrid digital/live action. The current state-of-the-art is the use of LED screens that wrap around a soundstage, including the ceiling, called a “volume,” which depicts the set as it will look on screen. It also obviates the need to travel to different locations, worry about weather or squeeze in a shoot during fleeting lighting conditions. In this case, a video is worth a million words; watch this explanation of the use of VP during the shooting of The Mandalorian. The first couple of minutes make the point.

The upfront cost of building a volume is still very high, the workflows are still new and bumpy and filmmakers/showrunners have to embrace it, but VP promises to reduce production costs for a number of reasons: more efficient shooting schedules (i.e., the ability to get through more pages per day and reduce the time required of actors); no location and travel costs; the ability to re-use assets and sets on other productions; elimination of re-shoots, which can sometimes account for 5–10% in cost overruns; and less time in post production.

It’s hard to get at the potential cost savings from VP, but some estimates peg them at 30–40% of production cost, or more. Some of these savings may end up on the screen, as directors use the technology to expand the scope of their productions. But more bang for the buck is good either way.

VP can cut production costs for hybrid digital/live action projects by 30–40%.

Sounds pretty good. But turning our attention next to fully digital productions gives a sense of where the technology is headed.

Fully digital. The frontier in VP is productions that are fully digital, meaning there is no set at all. In this case, all the assets and even people are created digitally and the entire production occurs within the engine. (Although the characters’ movement and facial expressions may be mapped to motion capture hardware worn by real actors and their voices are also likely real, at least for now.)

This behind-the-scenes description of a Netflix short produced using real-time rendering is, again, worth a lot of words.

Importantly, all of the people in this short are actually MetaHumans, Unreal Engine’s photorealistic digital humans. Creators can use (and alter) dozens of pre-stocked MetaHumans or create custom MetaHumans using scans, as was done for this short. Unity’s digital humans are even more impressive (watch from about the 1:30 mark below or just look at the image to get the point).

Keep in mind that the quality of rendering is gated by compute power. As GPUs get more powerful (and/or UE and Unity support multiple simultaneous GPUs, as Omniverse already does), these digital humans will become progressively indistinguishable from real people.

Here’s another video, The Matrix Awakens demo created by Warner Bros. and Epic. The video is long, but worth watching in its entirety. The keys here are severalfold: 1) this video was rendered real-time in UE5 on a PS5 and Xbox Series X; 2) it is very difficult to distinguish which of these characters are real and which aren’t, but everything from about the 2-minute mark on was created in the engine: every car, building, street, lamppost, mailbox and person, even Keanu Reeves and Carrie-Anne Moss (albeit mapped to motion capture output); and 3) the transition between the linear story and the gameplay is seamless.

Real time rendering is a very powerful tool that may fundamentally change the cost structure of making high-quality filmed entertainment. But to get a real sense of the potential, it’s helpful to layer on the next piece, AI.

AI and Even Faster Falling Costs

AI is clearly having its Cambrian moment and generative AI, in particular, is rightfully getting a lot of attention. The prospect of art created with little or no human involvement is deeply unsettling to a lot of people, including me. The near-term relevance of AI (including generative AI), however, is not that it will replace human creativity, but that it may greatly increase the efficiency of the production process.

Here and Now

Although it has been overshadowed by the excitement around DALL-E 2, Midjourney, ChatGPT, etc., there has also been a quieter wave of AI content production technologies and tools over the last year or two (some of which you would also call “generative”). Here is a highly incomplete list:

RunwayML, which uses AI to erase objects in video, isolate different elements in the video (rotoscoping) and even generate backgrounds with a simple text prompt. Again, a video is better than a description.

DreamFusion from Google and Magic3D from Nvidia, which are text-to-3D-model models (say that five times fast). Type in “a blue poison-dart frog sitting on a water lily” and Magic3D produces a 3D mesh model that can be used in other modeling software or rendering engines.

Neural Radiance Field (NeRF) technology, which enables the creation of photorealistic 3D environments from 2D images. See the short demo of Nvidia’s Instant NeRF below or check out Luma AI.

AI-based motion capture software, such as DeepMotion and OpenPose, which convert 2D video into 3D animation without traditional motion capture hardware.

There has been academic research on AI-based auto-rigging, which would automatically determine how digital characters move based on their anatomy.

There are also several enterprise applications, like Synthesia.io, which provides AI avatars that will speak whatever text is provided and even offers customized avatars. Send in a few facial scans, and it will send back an avatar of the subject that can then be used to deliver any written text, in any language.

Deepdub.ai, which uses AI to dub audio into any language, using the original actor’s voice.

Lastly, do yourself a favor and go to thispersondoesnotexist.com and hit refresh a few times. None of these very real looking people are real.

The Near Future

Many of these tools are clearly imperfect. The avatar from Synthesia definitely falls into that off-putting uncanny valley. Perhaps the 2D motion capture doesn’t seem that crisp. But, here’s the thing: all of this will keep getting better, very quickly. As mentioned above, the gating factors for improvement in all these tools are the size of datasets, the sophistication of algorithms and compute power, all of which are advancing fast.

Real-time rendering engines and AI-enhanced tools make it plausible that very small teams can create very high quality productions.

The trajectory here is clear: combining real-time rendering engines and these kinds of AI tools will make it possible for smaller teams, working with relatively small budgets, to create very high quality output. The average TV show requires ~100–200 cast and crew in a season and some a lot more than that. In its first season, for instance, House of the Dragon lists 1,875 people in the cast and crew, including over 600 in visual effects. What if eventually comparable quality could be achieved with half, or one-third or one-fifth as many people?

The timing for different content genres to shift a larger proportion of production into VP will likely depend on consumers’ expectations for video fidelity and the importance of effects vs. acting.

Animation will be first. Traditionally, the workflow in animation is also sequential, similar to live action: storyboarding; 3D modeling; rigging (determining how characters move); layouts; animation; shading and texturing; lighting; and finally, rendering (pulling all of that work together by setting the color of each individual pixel in each individual frame). Rendering is especially time consuming and expensive. Consider a 90-minute movie. With 24 frames per second, that’s ~130,000 frames, each of which takes many hours to render. (Every frame in this scene from Luca took 50 hours to render.) This is performed in render farms and even though many frames are rendered simultaneously, it can take days or weeks to come back. Any adjustments will need to be rendered again. Taking the entire process into account, most Pixar films take 4–7 years to complete and include a cast and crew of 500+.
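To put rough numbers on the rendering arithmetic above, here is a back-of-the-envelope sketch in Python. The 90-minute runtime, 24 frames per second and 50 hours per frame come from the text. Two things are assumptions for illustration only: that every frame takes as long as the Luca scene (an upper-end simplification) and that the render farm has 2,000 machines working in parallel (a purely hypothetical figure).

```python
# Back-of-the-envelope render budget for an animated feature.
RUNTIME_MINUTES = 90      # from the text
FRAMES_PER_SECOND = 24    # from the text
HOURS_PER_FRAME = 50      # the Luca scene figure, assumed here for every frame
FARM_MACHINES = 2_000     # hypothetical render-farm size, not from the text


def total_frames(runtime_minutes: int, fps: int) -> int:
    """Number of individual frames in the film."""
    return runtime_minutes * 60 * fps


def total_render_hours(frames: int, hours_per_frame: float) -> float:
    """Machine-hours needed to render every frame once."""
    return frames * hours_per_frame


def wall_clock_days(render_hours: float, machines: int) -> float:
    """Elapsed days if the work is spread evenly across the farm."""
    return render_hours / machines / 24


frames = total_frames(RUNTIME_MINUTES, FRAMES_PER_SECOND)
render_hours = total_render_hours(frames, HOURS_PER_FRAME)
days = wall_clock_days(render_hours, FARM_MACHINES)

print(f"Frames: {frames:,}")
print(f"Machine-hours for one full pass: {render_hours:,.0f}")
print(f"Wall-clock days on {FARM_MACHINES:,} machines: {days:,.0f}")
```

Under these assumptions, a single full pass runs to millions of machine-hours, which is why any adjustment that forces a re-render is so costly in the traditional workflow.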

By contrast, using VP, teams can be smaller, since artists can wear more hats, and it becomes relatively trivial to make adjustments, including lighting, colors and perspective, on the fly. (To be clear, 3D engines are not producing photorealistic renders in real time today, so the final frames will still likely need to go out for offline rendering. But the key is that real-time rendering allows experimentation and iteration on the fly. And it will continue to improve.) Spire, a new animation studio co-founded by Brad Lewis, producer of Ratatouille, is currently working on a full-length feature created entirely in UE, called Trouble.

CG-intensive live action films are probably next. As you can see in the behind-the-scenes video I embedded above about The Mandalorian, even though few of them look human, there are still a lot of actors walking around the volume. Over time, a growing proportion of the footage in these kinds of series and films will likely be produced without actors, other than motion capture. Eventually, even that may be unnecessary. When you watch the Mandalorian walk around in his helmet, Thanos snap his fingers or the Na’vi swim with whales, it raises the question of whether you will need humans in these kinds of series and films at all in five years.

MetaMeryl? What about a drama or romance with a lot of nuanced acting? It might take a while before you could or would even want to supplant Meryl Streep with a MetaHuman. The savings might not be worth it. But will it eventually be technically possible to do a series of facial scans of an actor, then have him voiceover the entire script and have his corresponding MetaHuman do all the “acting,” where the director could manipulate his gestures and facial expressions to get the precise take she wants? For that matter, will it eventually be possible to train an AI on the footage of every Angelina Jolie movie ever, including her voice and facial expressions, license her likeness, and then create a new film with a 28-year-old Angelina Jolie starring opposite a 32-year-old Paul Newman (also licensed), all in the Unreal Engine? The way things are headed, it probably will.

Web3 and a New Financing Model

This is the last piece of the puzzle: financing.

As mentioned before, producing TV and movies has a high barrier to entry not just because it is expensive, but because it is risky. Returns exhibit power law dynamics, meaning they are highly variable. The investment is also front loaded, since you need to spend a lot of money to create an entertainment asset and then a lot of money to market it before you find out if an audience will even show up.

Contrary to popular belief (and with all due respect to the development people that have the vision to option the right projects), movie studios don’t make movies; they attract the talent that makes movies. And they attract this talent in large part by absorbing risk. But web3 may reduce the need for studios to absorb risk.

Movie studios don’t make movies, they attract the talent that makes movies — in large part by absorbing risk.

Crowdfunding on Steroids

It’s a tough time to be a crypto bull. But whether you are a firm believer in the unique utility, and inevitability, of the decentralized Internet or a complete skeptic, here’s the concept: web3, by which I simply mean applications that are facilitated by the combination of public blockchains and tokens, enables what you could call “crowdfunding on steroids.”

Crowdfunding content isn’t new. It’s been done for years on Kickstarter and Indiegogo. The highest profile example is the reboot of Veronica Mars, which raised $5.7 million on Kickstarter from 90,000 fans for a new film, seven years after the series went off the air. For the most part, these campaigns only work for established IP with a large pre-existing fan base. They also usually are positioned as donations, not investments, or offer trivial incentives, like merchandise, autographs, movie tickets or DVDs, not profit participation or any governance rights.

The combination of tokens and public blockchains provides several benefits:

Governance and other perks. Tokens can be structured such that token holders (or holders of specific classes of tokens) can vote on significant decisions (including the direction of the storyline itself, sort of a communal “choose-your-own-adventure”). They can also provide token-gated perks, such as member-only Discord servers, or early or exclusive access to content and merchandise.

Graduated financing. As mentioned above, the typical model for many traditional content projects is to invest tens of millions in production and tens of millions more in marketing before finding out if anyone’s interested. Web3 projects enable creators to build community first (such as through initial NFT projects) and use subsequent NFT sales to fund additional content projects.

Web3 inverts the traditional risk profile of content production; rather than spend heavily to build IP and then try to find an audience, it builds the community first and then develops the IP.

Social signaling. The tokens themselves, which can be showcased publicly, may provide social currency. For instance, the early backers of a project can display their tokens as proof-of-fandom.

Economic participation with liquidity. People are fans because they are passionate about something. Tokens can supercharge that fandom by providing something new: an economic incentive. Tokens can (theoretically) be structured with direct profit participation rights or fractionalized IP ownership. Or tokens may simply be limited collectibles that will likely rise in value if the associated IP succeeds. And they are liquid. An economic incentive will likely turn fans into even more ardent evangelizers.

A Few Examples

There are enough examples of blockchain-based, community-driven film and TV development that it has earned its own moniker, Film3. Here are a couple of the highest-profile examples:

Aku World revolves around Aku, a young Black boy who wants to be an astronaut. Aku was the first NFT project that was optioned for a film and TV project and the founder reportedly intends to give the community input into the future development of the IP.

Jenkins the Valet is the name and persona that the owner of a Bored Ape Yacht Club (BAYC) NFT assigned to his ape, which he developed by writing stories about Jenkins’ exploits. Jenkins has signed with CAA, with the intention to develop other media properties, including film and TV.

Shibuya is a platform for creating and publishing video content, which enables creators to provide governance rights and direct IP ownership to fans. Its first project is White Rabbit; fans can vote on the plot development of each chapter and, when completed, ownership will be converted into a fractionalized NFT. Last week it raised $7 million, led by a16z and Variant.

HollywoodDAO, StoryDAO and Film.io are all decentralized autonomous organizations (DAOs), among many, that include some combination of community creation, governance and ownership.

A Rough Cut of the Implications of Falling Production Costs

If you went back 15 years and tried to predict the implications of the disruption of video distribution, you probably wouldn’t have pieced together what’s happened since. It’s mind boggling to think about what may happen if content production follows a similar path. But here are some first order (and obvious) effects:

Every aspect of the TV and film business will be affected. Given all the dislocation that has occurred from the disruption of the distribution model, disruption of the content creation model would probably result in an industry that looks almost nothing like it does today.

There will be a lot more “high quality” content and hits will emerge from the tail. The vast majority of short form is crap. If the average quality of this tonnage lifts, however, and even a tiny percentage breaks through, it could meaningfully increase the supply of what we currently consider quality video content.

Think about it this way. Today, relatively few companies in Hollywood make the vast majority of TV series and films, relatively few people at these companies work in development and even fewer make greenlight decisions. How many? Maybe 100, 200 max. Is it likely that this small group of people collectively has greater creative intuition than an almost infinite number of potential creators?

This is already what occurs in music. It was recently announced that 100,000 tracks are uploaded to streaming music services each day, the overwhelming majority of which get no traction. But almost all of the new breakout acts of the last few years — like The Weeknd, Billie Eilish, Lil Uzi Vert, XXXTentacion, Bad Bunny, Post Malone, Migos and many more — emerged from the tail of self-distributed content, not from A&R reps hanging around at 2AM for the last act.

There will be far more diverse content. If it sometimes feels like every TV show and movie is a reboot, prequel, sequel, spinoff or adaptation of established IP, that’s because a growing proportion are. This article shows the data for TV and movies; Ampere Analysis also recently reported that 64% of new SVOD originals in the first half of 2022 were based on existing IP. This reliance on established IP is an understandable risk mitigation tactic by the studios, especially as the costs of content and the stakes for delivering hits rise. If the trends I described above continue to play out, studios may become more risk averse and lean even more heavily on established IP. The collective tail will be much more willing to take creative risk and experiment with new stories, formats and experiences. It will also, by definition, have much more diverse creators.

Curation will become even more important. As I wrote about here, value flows toward scarce resources and truly disruptive technologies tend to change which resources are scarce and which are abundant. Prior to the advent of the Internet, content was relatively scarce because there were high barriers to entry to distribute it (such as the need to lay fiber and coax, own scarce local spectrum licenses or build printing facilities). There wasn’t much to curate, so curation — like local TV listings, TV Guide or Reader’s Digest — was “abundant” and extracted little value. The Internet flipped this dynamic, making content abundant and curation scarce and valuable.

There is no better example than the news business, where the barriers to entry to create content were always low. Once distribution barriers also fell, there was an explosion of “news” content (from bloggers, independent journalists, the Twitterati, local and regional newspapers distributing globally and digital native news organizations) and the bulk of the value created by news content is actually extracted by the curators/aggregators of news (Google, Meta, Apple News, Twitter, etc.), not news organizations.

In long form video, this value shift hasn’t occurred because even after distribution barriers fell, content creation barriers remained high. A similar explosion of quality video content would cause value to shift to curation, as consumers find it exponentially harder to wade through all their choices and become less reliant on only a handful of big content creators/distributors.

A new way of creating content may enable (and necessitate) a new way to monetize it. Of course, the degree to which costs will fall is both critically important and unknowable. If it becomes possible to create a Pixar-quality film with half the team, half the budget and half the time, what happens then? Maybe not that much changes. It probably gets financed independently, picked up by Netflix and distributed (and monetized) like everything else. What if costs fall 75%? 90%? What if you could make a high quality TV series for $500,000 an episode, not $5 million? $50,000? Two friends in a dorm room?

As costs fall, new monetization models become possible. Maybe ad revenue is enough? Perhaps single sponsors (as we head back to the days of soap operas) or product placements? Perhaps microtransactions? Maybe fractionalized NFTs, where the creators get paid by retaining a significant portion of the tokens? Maybe abundant, free high quality video content becomes top-of-funnel for some other forms of monetization for the most committed fans (free-to-watch)?

Counterintuitively, the most expensive content may be affected soonest. As mentioned above, one of the content genres that will benefit soonest from the combination of VP and AI is CG-heavy live action films and series. These are also the most expensive productions (look again at Figure 1). The good news for studios is that these tools could meaningfully reduce production costs for these kinds of projects. The bad news is that they may also lower entry barriers for their highest-value content.

The most valuable franchises may become even more valuable. With new tools and lower costs, many creators will want to dream up entirely new stories. A lot will also probably want to expand on their favorite fictional worlds, whether Harry Potter, the MCU or Game of Thrones — or create mash-ups between them. Historically, Hollywood has guarded its IP closely and has been more inclined to view fanfiction as copyright infringement than enhancement. But progressive rights owners would be wise to harness all the potential creative energy, not stifle it.

Last embed, I promise. This video shows a small team (actually, it is mostly one guy) using AI tools to create his own version of the animated Spider-Man: Into the Spider-Verse, incorporating live action footage from MCU films. The video is long, but if you watch the first few minutes and then the movie he put together (which starts at about the 19:45 mark), you get the point. It exemplifies a lot of what I’ve discussed above.

The Good News? It’s Early

What should studios do? That probably requires another essay, but a few things come to mind:

Embrace the technology. The big media companies’ current predicament could be summarized this way: the tech companies became media companies before the media companies could become tech companies. Hollywood has a very spotty record with new technologies. It doesn’t embrace them; it goes through something like the five stages of grief: denial, dismissal, resistance (often through legal means), “innovation theater” (going through the motions of embracing a new technology without really doing so) and capitulation. Hollywood should embrace VP and AI to capitalize both on the greater cost efficiency and the optionality of having every visual element warehoused as a reusable, extensible digital asset.

Put differently, the trends I described above may be inevitable, but disruption is not. Disruption describes a process by which incumbents ignore a threat until it is too late. That doesn’t mean the incumbents have to repeat this pattern.

Lean into fanfiction. As mentioned above, with a democratization of high quality production tools, many independent creators will want to expand on their favorite IP, especially those with rich, well developed worlds. Rather than resist, IP holders should think of their IP the way the music industry thinks of its own. Perhaps a framework similar to “publishing rights” will emerge that enables video IP rights owners to monetize third-party exploitation of their work.

Look to the labels. Historically, the music labels controlled every aspect of the business, including A&R, artist development, production, distribution and marketing. Today, many of those roles have been supplanted by technology. Anyone can set up a recording studio in their bedroom; anyone can self-distribute on streaming services; and artists market through their social followings. But labels have maintained their primacy, in large part by helping artists negotiate the incredible complexity of the business and leveraging the bargaining power of their artist rosters and deep libraries. The analogy is imperfect (for instance, library is a lot more important in music than video, giving the labels a lot of bargaining leverage), but the labels provide a hopeful model for how to pivot.

With all the hand-wringing about streaming economics, the dynamics I described above aren’t top of mind yet for media executives. The good news is that it’s still early.